Introduction

AI-based tools have rapidly evolved from novelties surrounded by speculation into powerful assets that can’t be ignored. In the last decade, large advances have been made in neural-network-based, node-based approaches to AI programs. Perhaps even more importantly, the advent of Generative Adversarial Networks (GANs) in 2014 greatly enhanced AI’s ability to produce realistic content by pitting two neural networks against each other: one generates content, while the other tries to discriminate between real content and the content the first network produces. By allowing the networks to compete with each other, GANs provide a way for AI tools to learn from their own generations and improve without a human judging each result.

As the process continues to be refined, every subculture and industry stands both to gain from and to change alongside this far-reaching technology, and the arts are no different. As we generated content and explored popular, commercially available AI tools in class, most of which use GANs as part of their process to generate such realistic content, I began to see this potential in the arts for myself. Neural-network-based AI tools can create extremely intricate and detailed works across a wide variety of mediums, and I found that both impressive and sobering. Almost anything that I could think of could be generated, and the prompt slowly refined, to produce a result that satisfied my immediate desires.

However, looking over the results, I never felt a sense of ownership or responsibility for the content that was being created. Gone was the indescribable satisfaction of creating something that only you could have willed into existence. Instead, my prompts began to feel like the descriptions in an artist’s commission: a list of do’s and don’ts that merely served as a guideline for someone else’s work.

In this case, a machine’s.

As similarities and common themes began to develop in the images I was generating, I began to seek ways of putting as much of the creative power and expression in my own hands as I could. Early in the course, we were instructed on the process of creating a custom GPT inside ChatGPT, and this proved to be our first opportunity to reclaim some of that creative power. Through our world-building exercise, many of us were able to exert enough artistic control over our generations to produce a series of images that plausibly seemed to be from the same realm or universe, but it was not enough. I still felt like a bystander, watching over the shoulder of some great artist as they meticulously brought life to the words and descriptions of a rambling muse.

For my final project, I decided to take my pursuit of a deeper personal connection with AI-generated art to the roots and examine the process as a whole, in order to understand how to create truly unique works through AI tools. I wanted to find a way to produce AI content based solely on source material of my own choosing, and to do that, I needed to understand how image generation through AI worked on a deeper level.

How an image is generated

I began my process of discovery by asking ChatGPT for resources and explanations covering the entire process of generating images through an advanced GAN-based neural network, from beginning to end. After receiving several pages of information, as well as links provided by ChatGPT for further education on the subject, I was able to break the process down into several key steps that gave me a better idea of how images are generated.

First, a neural network called a transformer, which is designed for understanding text and images, is trained on a large dataset of images and their corresponding descriptions. These images are usually collected from the publicly available internet, and most of the transformer’s text-based learning comes from examining the alt text (the alt attributes of image tags) in the HTML of the websites where the images appear. This process allows the transformer not only to assemble a large library of images but also, through the alt text that describes them, to understand and describe them in human language.
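
The pairing of images with their alt text can be illustrated with a minimal sketch using Python’s standard html.parser module. This is only an illustration of the idea, not the pipeline any particular AI company uses; the class name ImageAltCollector, the helper collect_image_captions, and the sample page are all hypothetical and invented for this example.

```python
from html.parser import HTMLParser


class ImageAltCollector(HTMLParser):
    """Collects (src, alt) pairs from <img> tags in an HTML page."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        alt = attributes.get("alt")
        # Keep only images that have both a source and a non-empty description.
        if src and alt and alt.strip():
            self.pairs.append((src, alt.strip()))


def collect_image_captions(html_text):
    """Return the list of (image source, alt text) pairs found in html_text."""
    collector = ImageAltCollector()
    collector.feed(html_text)
    collector.close()
    return collector.pairs


# Hypothetical page content, invented for illustration only.
SAMPLE_PAGE = """
<html><body>
  <img src="cat.png" alt="A grey cat sleeping on a windowsill">
  <img src="spacer.gif" alt="">
  <img src="dunes.jpg" alt="Sand dunes at sunset">
</body></html>
"""

if __name__ == "__main__":
    for src, alt in collect_image_captions(SAMPLE_PAGE):
        print(src, "->", alt)
```

Images without a description, like the spacer in the sample, are skipped because they give the model nothing to learn a caption from.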

This allows the transformer to break down a user’s text prompt into instructions that can dictate the theme, objects in the scene, context, and other valuable information. The prompt is first split into units referred to as tokens, which are commonly words or parts of words stored in the model’s vocabulary. These tokens then go through semantic analysis to help the machine comprehend their meaning. After this stage of comprehension, the model extracts features from these tokens that dictate the objects in the scene and their placement. This semantic analysis also helps the AI model infer broader contexts or other elements that may be implied by the words, further increasing the complexity of its generations. These features are then weighted for emphasis in the generation by algorithms referred to as attention mechanisms, with more prominently described features receiving a heavier weight that instructs the model to give these elements more prominence in the image.
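
A toy sketch may help make the idea of weighting tokens concrete. It splits a prompt into word tokens and computes scaled dot-product attention weights, the weighting scheme used in transformer models. Everything else here is an assumption made for illustration: real systems split text into subword tokens and learn their vectors during training, whereas the two-dimensional vectors in TOY_VECTORS are hand-picked and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def tokenize(prompt):
    """Split a prompt into lowercase word tokens (a simplification of subword tokenization)."""
    return prompt.lower().split()


# Hypothetical hand-picked vectors; a real model learns these during training.
TOY_VECTORS = {
    "a": [0.1, 0.1],
    "red": [0.9, 0.2],
    "dragon": [1.0, 1.0],
    "over": [0.1, 0.2],
    "castle": [0.6, 0.8],
}

if __name__ == "__main__":
    tokens = tokenize("A red dragon over a castle")
    keys = [TOY_VECTORS[token] for token in tokens]
    query = TOY_VECTORS["dragon"]  # ask: which tokens matter most to "dragon"?
    for token, weight in zip(tokens, attention_weights(query, keys)):
        print(f"{token:>8}  {weight:.3f}")
```

In this sketch, filler words such as "a" and "over" receive small weights, while the descriptive words receive larger ones, which mirrors the emphasis described above.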

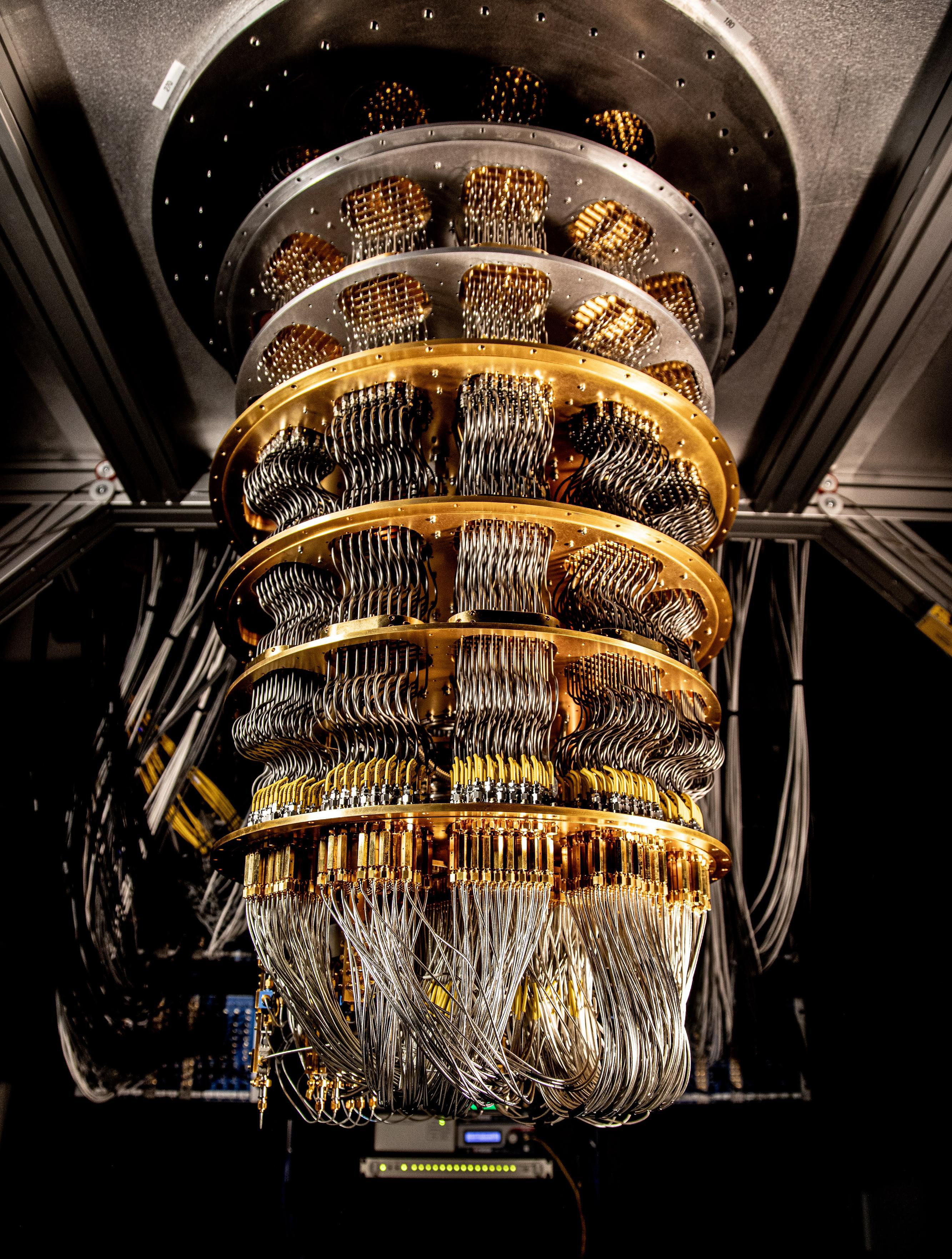

After the transformer has fully analyzed the prompt, the image generation process begins. According to the explanations I gathered, modern-day Generative Adversarial Networks generate these images by manipulating points in a latent space, which is a complex way of saying that the model compiles the tokens and features that correspond to the prompt into a numerical representation and then decodes it into an image. Midjourney, for example, starts by interpreting this information as patterns of random noise. Through many iterations, it gradually denoises the image until it matches the descriptions supplied by the tokens and features, in a process referred to as diffusion. The image then goes through a refinement process that adjusts color, enhances detail, and positions elements according to physics and perspective. Finally, the image is tested by a GAN for realism, adherence to the prompt’s tokens and features, and other quality standards such as clarity and composition before being presented to the user.
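
The gradual denoising described above can be pictured with a deliberately simplified sketch. It is not how Midjourney or any real diffusion model is implemented: a real model uses a trained neural network to predict the noise to remove at each step, while this toy version cheats by nudging the image toward a known target. The function names, the four-value "image", and the parameter values are all hypothetical.

```python
import random


def mean_abs_error(image, target):
    """Average absolute difference between two equally sized lists of pixel values."""
    return sum(abs(x - t) for x, t in zip(image, target)) / len(target)


def toy_denoise(target, steps=50, step_size=0.1, seed=0):
    """Start from random noise and move a little closer to target at every step."""
    rng = random.Random(seed)
    # Start from pure random noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    errors = []
    for _ in range(steps):
        # A real diffusion model would predict the noise with a trained network;
        # here the known target stands in for that prediction.
        image = [x + step_size * (t - x) for x, t in zip(image, target)]
        errors.append(mean_abs_error(image, target))
    return image, errors


if __name__ == "__main__":
    target_image = [0.0, 0.5, 1.0, 0.5]  # hypothetical 4-pixel "image"
    result, errors = toy_denoise(target_image)
    print("error after first step:", round(errors[0], 4))
    print("error after last step: ", round(errors[-1], 4))
```

Each pass removes a fraction of the remaining difference, so the error shrinks step by step, which is the intuition behind many small denoising iterations rather than one large jump.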

Experimentation and Application

After developing a rudimentary knowledge of the process of AI image generation, I began a search for tools and resources that could help me get started on this process for myself. By querying ChatGPT and basic search engines, I discovered many resources that both illustrated this process and provided options to build my own custom datasets to run through it.

The first and most accessible of these that I experimented with was RunwayML’s custom image generator. This service allows you to upload images into a custom dataset that is then used to generate unique images. Personal research and a conversation with ChatGPT revealed that the images uploaded into this gallery are analyzed for their features and style, and that this information is extracted in the form of tokens, which can then be combined with RunwayML’s existing styles or left unaltered for generations.

I uploaded a small gallery of drastically different psychedelic-themed images into this generator. My goal was to provide a minimal but heavily diversified dataset, so that sharp contrasts and correlations between generations would be easy to notice and could provide evidence of unique imagery.

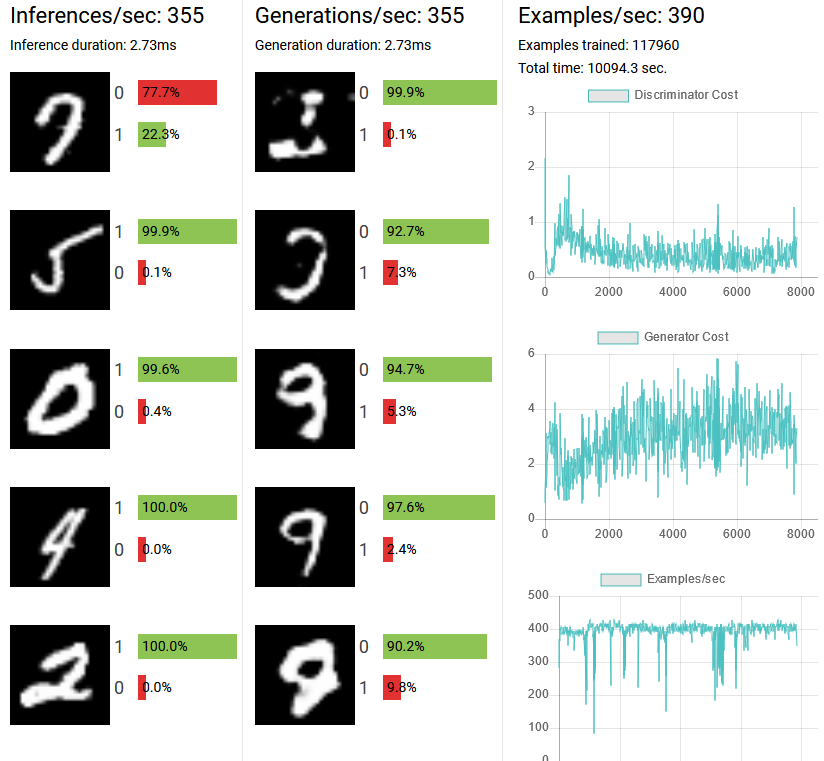

The second experiment involved a GAN simulation available on the web called GAN-Playground. This website allows the user to manipulate variables commonly used as parameters and elements in the code without requiring a background in machine learning or deep learning techniques in Python and other programming languages. By changing the architecture of the generator and discriminator networks, I was able to see in real time how a modern-day AI system processes and generates images.
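
The competition between a generator and a discriminator can be sketched with the two loss functions commonly used to train GANs. This is a minimal illustration and is not taken from GAN-Playground’s code: the function names are hypothetical, the scores are made-up probabilities standing in for a discriminator’s output, and the generator loss shown is the commonly used "non-saturating" variant.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: low when real images score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p) for p in fake_scores) / len(fake_scores)


if __name__ == "__main__":
    # Hypothetical discriminator outputs: probability that an image is real.
    real_scores = [0.9, 0.8]
    caught_fakes = [0.1, 0.2]     # discriminator spots the generated images
    convincing_fakes = [0.9, 0.8]  # discriminator is fooled

    print("discriminator loss, fakes caught:", discriminator_loss(real_scores, caught_fakes))
    print("discriminator loss, fooled:      ", discriminator_loss(real_scores, convincing_fakes))
    print("generator loss, fakes caught:    ", generator_loss(caught_fakes))
    print("generator loss, fooled:          ", generator_loss(convincing_fakes))
```

The two losses pull in opposite directions: whatever lowers the generator’s loss raises the discriminator’s, and training alternates between improving each network in turn.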

After exploring these two tools, it quickly became apparent what my next steps would have to be in order to pursue these concepts further. I began to hit roadblocks in the form of machine learning concepts and Python-based models that I did not understand, and I was disappointed to find that working with open-source GANs and custom datasets required a firm understanding of these subjects. Hopefully, once I have progressed further along in my courses, I will be able to revisit this topic and try once more to develop unique imagery from a custom dataset.