Back in high school I gave myself a challenge: take a random photo of something every day. I failed and only took about 40 photos total. Some of the photos were of my friends, which made them very nostalgic to look at. Others were of random things, like studying in class or just a chair in the dark. The photos I liked had an artistic touch that really resonates with me. To other people they probably look like random photos, but to me they feel like art.

For this exercise I wanted to see if I could recreate some of those photos using AI tools. My initial plan was to chat with DALL-E and keep giving it descriptions of the photo until the result matched, which did not really work well. This might be because of the prompts I gave it; I was never good with words. So I changed my approach: I asked Midjourney to describe my image, then took that prompt, adjusted it, and gave it to DALL-E. This also did not work. So I settled on just uploading the actual picture and trying to get the result as close as possible. I ended up comparing how DALL-E and Midjourney each recreated an image.

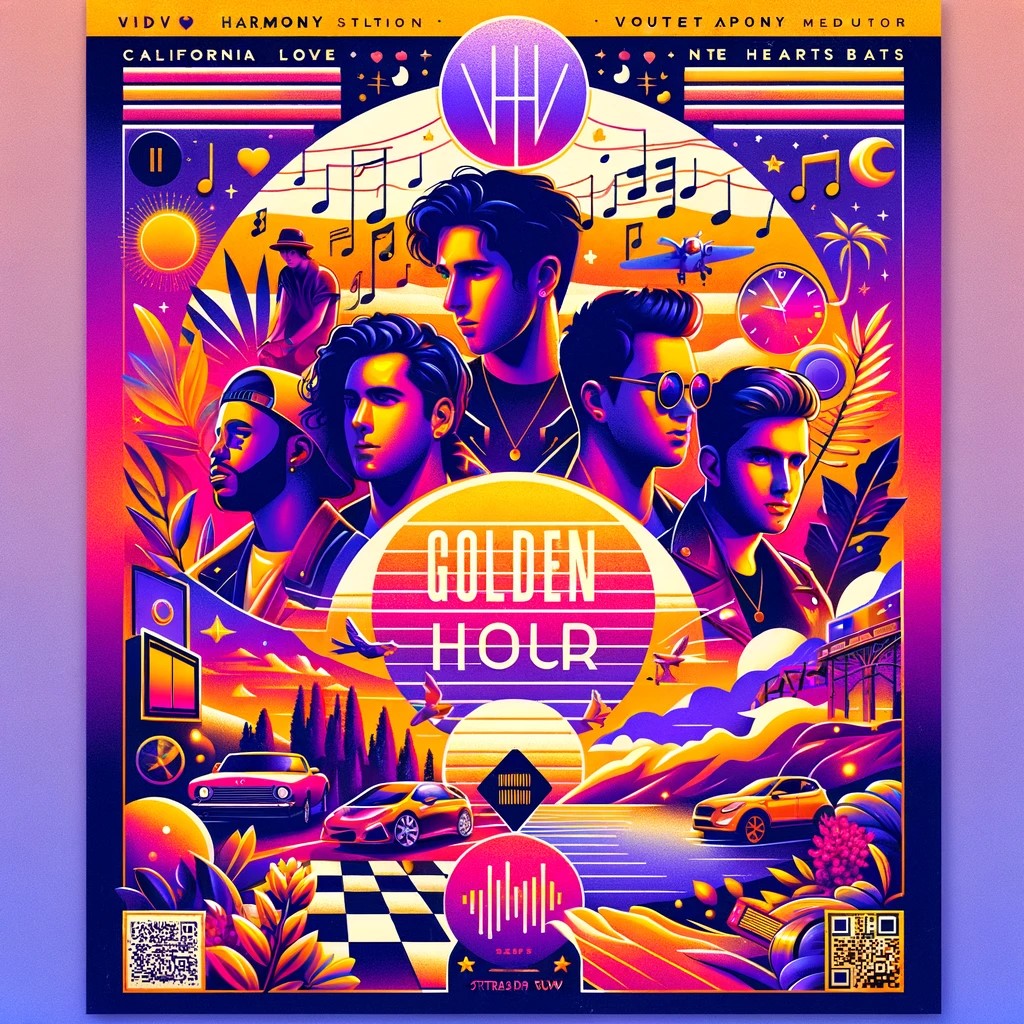

Between the two AI tools there are major differences in how they recreated the image. For some reason, Midjourney added people to every one of the photos. DALL-E always aimed for a realistic art style, but you could tell the result was a bit cartoony. In the end, this was a unique experience that will make me think about which tool to go to first when I want to make a visual.

https://docs.google.com/presentation/d/14bnjMBotRvlEvhiEdW0NNfZnKJvqzfHQqGS6-MJqA8c/edit?usp=sharing