To Do This Week

Weather App DUE this Wednesday, April 16th

HTML5 Game NOW DUE Monday, April 14th

P5js Project (5%)

Due April 16th

Create a generative animation using p5.js that reflects your personal style and curiosity. Your sketch should include animation, randomness or Perlin noise, and pattern logic. You may use AI for assistance, but things will only get really interesting if you dig in and play with the code. Mistakes sometimes reveal interesting results. When you prompt an AI, ask it to include good comments in its output.

You can use the p5js editor to work out your script, but you should submit to Canvas an HTML page called index.html inside a folder called "p5js".

You will need to link to the p5js library in the head. Use a <script> tag with: src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.9.0/p5.min.js"

Requirements:

- Include randomness (random() or noise())

- Include some kind of animation or interaction

- Include logic steps that describe your idea as comments in the code.

This is about play, remix, and experimentation!

Generative Art with P5js

Processing Overview

P5js (Processing for JavaScript) is a library that makes it easy to create complex drawings and animations using Canvas and additional built-in Processing methods. Below are resources and a starter template that includes the p5js file. Look at these resources in order to see how p5js works.

p5js.org/ : processing > javascript for fun and art, creative programming

P5js Methods

Animation Loop

- setup(): Runs once at start. No need to call function!

- draw(): Runs repeatedly every frame. No need to call function!

- frameRate(fps): Sets the frame rate. [frameRate()]

- noLoop() / loop(): Stops or resumes looping. [noLoop], [loop]
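
A minimal sketch of the animation loop, assuming p5.js is already loaded on the page. The helper nextX() and the numbers used (canvas size, speed, frame rate) are illustrative choices, not part of p5.js.

```javascript
// Our own helper (not a p5 function): advance x by speed and
// wrap back toward 0 once it passes the right edge.
function nextX(x, speed, width) {
  return (x + speed) % width;
}

let x = 0; // current horizontal position of the circle

function setup() {
  createCanvas(400, 200); // runs once at the start
  frameRate(30);          // ask for roughly 30 frames per second
}

function draw() {
  background(220);            // clear the previous frame
  circle(x, height / 2, 40);
  x = nextX(x, 3, width);     // move a little every frame
}

function mousePressed() {
  // Click to pause or resume the animation.
  if (isLooping()) {
    noLoop();
  } else {
    loop();
  }
}
```

Try removing the background() call to see what happens when old frames are not cleared.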

Canvas & Setup

- createCanvas(w, h): Creates a canvas.

- background(val): Sets background color.

- smooth() / noSmooth(): Enables/disables anti-aliasing. smooth() is default [smooth], [noSmooth]

Drawing & Shapes

- line(x1, y1, x2, y2): Draws a line.

- circle(x, y, diameter): Draws a circle.

- ellipse(x, y, w, h): Draws an ellipse.

- arc(x, y, w, h, start, stop, [mode]): Draws part of an ellipse. Angles are in radians; useful constants are HALF_PI, PI, PI + HALF_PI, TWO_PI. [arc()]

- rect(x, y, w, h): Draws a rectangle.

- fill(r, g, b, [a]) / noFill(): Sets or disables fill. [fill], [noFill]

- stroke(r, g, b) / noStroke(): Sets or disables stroke. [stroke], [noStroke]

- strokeWeight(w): Sets stroke thickness

Input (Mouse & Keyboard)

- mouseX, mouseY: Mouse position.

- mousePressed(), mouseMoved(): Mouse events. [mousePressed], [mouseMoved]

- keyPressed(), key, keyCode: Key events and values. [keyPressed], [key]
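
A small sketch of mouse and keyboard input, under the assumption that you want a simple drawing tool. The helper actionForKey() and the key choices ("c" to clear, "s" to save) are made up for this example. mouseIsPressed is a p5.js variable that is true while a mouse button is held down.

```javascript
// Our own helper: translate a typed key into an action name.
function actionForKey(k) {
  if (k === 'c' || k === 'C') return 'clear';
  if (k === 's' || k === 'S') return 'save';
  return 'none';
}

function setup() {
  createCanvas(400, 400);
  background(255);
}

function draw() {
  // Leave a trail of dots while the mouse button is held down.
  if (mouseIsPressed) {
    noStroke();
    fill(0, 100, 200, 80);
    circle(mouseX, mouseY, 20);
  }
}

function keyPressed() {
  const action = actionForKey(key);
  if (action === 'clear') background(255);
  if (action === 'save') saveCanvas('drawing', 'png');
}
```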

Math & Vectors

- random(min, max): Random number in range. [random()]

- p5.Vector: Class for vector math. A vector can represent a position, a velocity, a direction, or a force [p5js.Vector]
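
A sketch of how p5.Vector can hold a position and a velocity, assuming a single ball that bounces off the canvas edges. The bounce() helper is our own, not a p5.js function.

```javascript
// Our own helper: flip a velocity component when the position leaves [0, max].
function bounce(pos, vel, max) {
  return pos < 0 || pos > max ? -vel : vel;
}

let position;
let velocity;

function setup() {
  createCanvas(400, 300);
  position = createVector(width / 2, height / 2);
  velocity = createVector(random(-3, 3), random(-3, 3)); // random direction and speed
}

function draw() {
  background(20);
  position.add(velocity); // move by the velocity each frame
  velocity.x = bounce(position.x, velocity.x, width);
  velocity.y = bounce(position.y, velocity.y, height);
  fill(255);
  circle(position.x, position.y, 24);
}
```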

Utilities

- saveCanvas(): Save canvas as image. [saveCanvas()]

- millis(): Time since sketch started (ms). [millis()]

- text("string", x, y): Draws text at the given position.

Full reference: https://p5js.org/reference

P5js Starter

- p5js shapes

- p5js square grid

- p5js animating with frameCount

- p5js drawing

- p5js velocity

- p5js bouncing balls

- p5js online editor

- p5js html starter template

P5js resources

- p5js examples

- p5js overview

- p5js reference

- p5js video tutorial

- how to code generative art with p5js

- p5js showcase

Weather Apps + P5js

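let weatherData; // stays undefined until the fetch below finishes

function setup() {

  createCanvas(600, 400);

  // Replace YOUR_API_KEY with your own OpenWeatherMap key.

  fetch('https://api.openweathermap.org/data/2.5/weather?q=London&appid=YOUR_API_KEY')

    .then(response => response.json())

    .then(data => {

      weatherData = data;

    })

    .catch(err => console.log(err));

}

function draw() {

  background(220);

  // Skip drawing until the weather data has arrived.

  if (!weatherData || !weatherData.weather) return;

  let mainWeather = weatherData.weather[0].main; // e.g., "Rain", "Clear"

  // The three draw...Pattern() functions are placeholders: define them yourself.

  if (mainWeather === "Rain") {

    drawRainPattern();

  } else if (mainWeather === "Clouds") {

    drawCloudPattern();

  } else {

    drawGenericPattern();

  }

}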
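
The snippet above calls drawRainPattern(), drawCloudPattern(), and drawGenericPattern() without defining them. Here is one possible sketch of what they could look like. Everything here is an assumption for illustration: the patterns themselves, the patternFor() helper, and the hard-coded weatherData object, which stands in for the API response so the sketch runs without an API key.

```javascript
// Stand-in for the API response; only the field used above is included.
let weatherData = { weather: [{ main: 'Rain' }] };

// Our own helper: pick a pattern name, falling back safely when data is missing.
function patternFor(data) {
  if (!data || !data.weather || data.weather.length === 0) return 'loading';
  const main = data.weather[0].main;
  if (main === 'Rain') return 'rain';
  if (main === 'Clouds') return 'clouds';
  return 'generic';
}

function drawRainPattern() {
  stroke(80, 120, 255);
  for (let i = 0; i < 60; i++) {
    const x = random(width);
    const y = random(height);
    line(x, y, x - 4, y + 14); // short slanted streaks
  }
}

function drawCloudPattern() {
  noStroke();
  fill(255, 200);
  for (let i = 0; i < 8; i++) {
    // noise() makes each cloud drift smoothly instead of jumping
    const x = noise(i, frameCount * 0.005) * width;
    const y = noise(i + 100, frameCount * 0.005) * height * 0.6;
    ellipse(x, y, 120, 60);
  }
}

function drawGenericPattern() {
  noFill();
  stroke(100);
  for (let d = 20; d < width; d += 20) {
    circle(width / 2, height / 2, d); // concentric rings
  }
}

function setup() {
  createCanvas(600, 400);
}

function draw() {
  background(220);
  const pattern = patternFor(weatherData);
  if (pattern === 'rain') drawRainPattern();
  else if (pattern === 'clouds') drawCloudPattern();
  else if (pattern === 'generic') drawGenericPattern();
}
```

Change 'Rain' to 'Clouds' or 'Clear' in the stand-in object to preview the other patterns.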

Generative Art

- Algorithmic/Rule-Based: Setting up strict rule systems to generate visual outputs.

- Randomness & Noise: Introducing randomness (e.g., Perlin noise) to simulate organic or chaotic patterns.

- Iterative / Recursive Approaches: Fractals and other self-similar patterns, such as recursive subdivisions.

Generative Art Examples

- Game of Life (P5js simulation)

- Openprocessing.org – community of generative artists.

- Amy Goodchild | Amy Goodchild: What is generative art?

- Tyler Hobbs | Fidenza series (flow fields)

- Emily Xie

- Joshua Davis

- Raven Kwok

- Kjetil Golid

Generative Artists

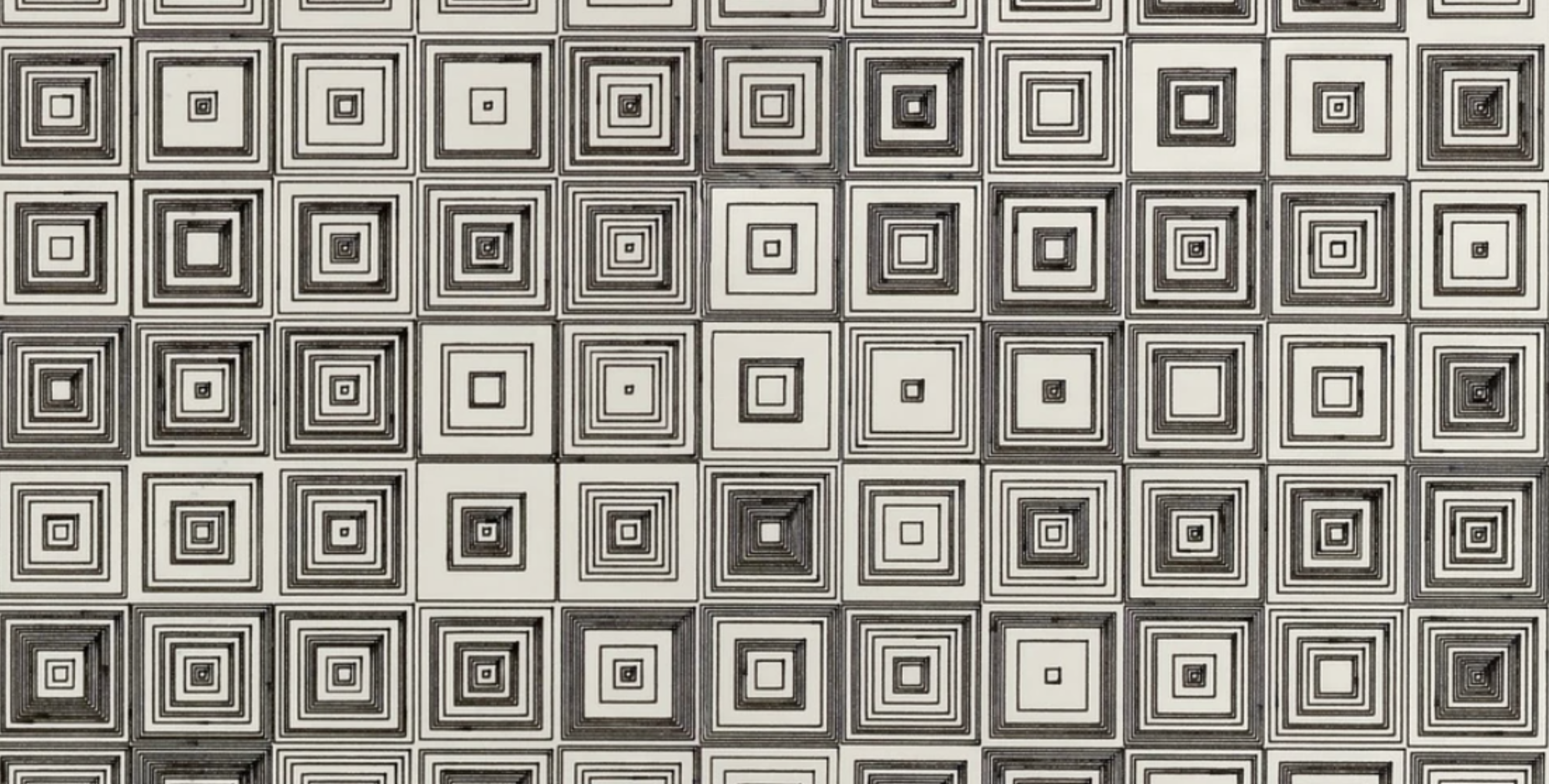

VERA MOLNÁR

Vera Molnár (1924-2023) was a Hungarian-French artist often recognized as one of the earliest pioneers of generative and computer-based art. In the late 1960s, Molnár began systematically using computers, an uncommon practice at the time, to explore geometric forms and algorithmic variations. She embraced rule-based processes to create series of drawings that reveal slight, methodical permutations in composition. Her work anticipates generative art and Processing, showing how simple computational rules can generate nuanced aesthetic complexity.

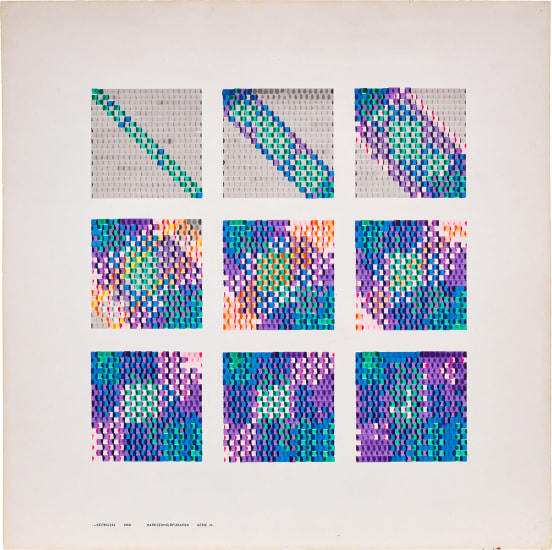

FRIEDER NAKE

Another key figure in early computer art who pioneered algorithmic and generative methods, Frieder Nake began creating computer-generated drawings in the 1960s. His work often explores mathematical and logical structures to produce abstract compositions, reflecting on the relationship between art, mathematics, and computation.

CASEY REAS

Co-founder of Processing (alongside Ben Fry), Casey Reas is deeply involved in computational and procedural art. His artwork features organic forms generated by algorithmic rules, pushing the boundaries of what code-driven aesthetics can achieve. He has also curated numerous exhibitions and publications that bridge art, design, and technology.

ZACH LIEBERMAN

An artist and educator known for interactive installations and playful, poetic use of code. Zach Lieberman experiments with the boundaries between the body, gesture, and computational expression. He frequently shares works-in-progress on social media and open-sources many of his creative coding projects, making his process accessible to the broader community.

zachlieberman.com

Instagram: @zach.lieberman

SOUGWEN CHUNG

Combines AI, robotics, and hand-drawn lines in her practice, exploring the interplay between human creativity and machine-generated gestures. Sougwen’s performances and installations often feature collaborative drawing sessions with robots (guided by AI), raising questions about authorship, intimacy, and the evolving relationships between humans and intelligent systems.

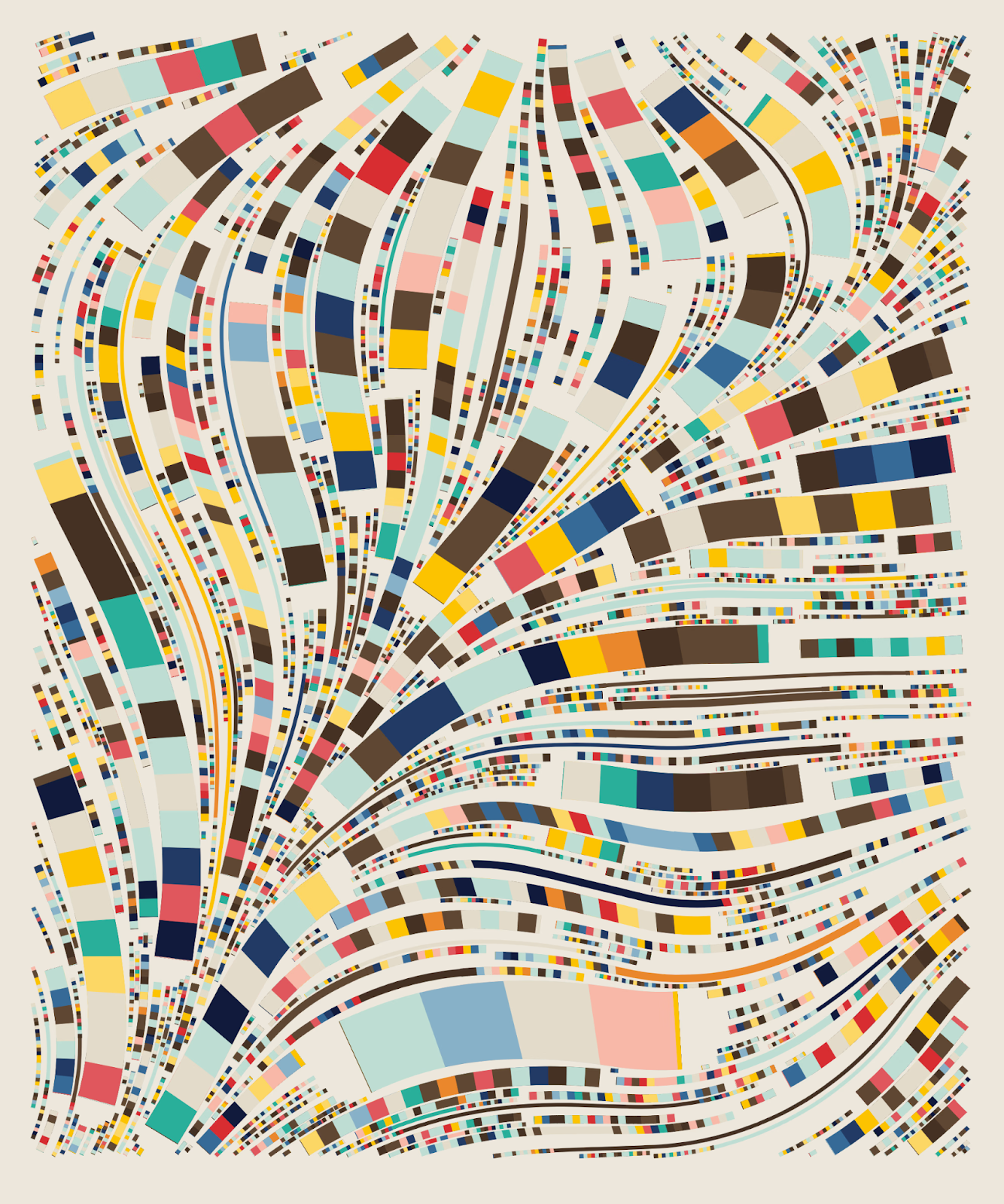

TYLER HOBBS

Well-known within the crypto art and NFT space—particularly for the Fidenza series on Art Blocks—Tyler Hobbs applies algorithmic processes to yield unique abstract compositions. His work is often lauded for blending chance with control, resulting in outputs that feel at once organic and meticulously designed.

tylerxhobbs.com

https://www.tylerxhobbs.com/works/qql#info

Advanced Generative Art Techniques

Flow Fields

A grid-based technique where each cell contains a vector determining the direction of particle movement. By populating the field with small agents or particles, artists generate organic, undulating patterns that resemble fluid, wind, or magnetic currents. Subtle adjustments to vector generation—often via noise—produce continuously evolving, visually engaging animations.

example: https://will-luers.com/DTC/dtc338-AI/javascript-p5js-flowfields.html

prompt: “Write a p5.js sketch that creates a flow field using Perlin noise, where 100 particles move according to the flow vectors. The sketch should visualize the paths in real time.”
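
A minimal flow-field sketch along the lines of the prompt above. Note one simplification: instead of storing a vector in each grid cell, it samples noise() directly at each particle's position, which gives a similar look with less code. The constants and the noiseToAngle() helper are our own choices.

```javascript
const NUM_PARTICLES = 100;
const NOISE_SCALE = 0.005; // smaller values give broader, smoother curves
let particles = [];

// Our own helper: turn a noise value in [0, 1] into an angle in radians
// covering two full turns (0 to 4 * PI).
function noiseToAngle(n) {
  return n * Math.PI * 4;
}

function setup() {
  createCanvas(600, 400);
  background(10);
  for (let i = 0; i < NUM_PARTICLES; i++) {
    particles.push(createVector(random(width), random(height)));
  }
}

function draw() {
  // No background() here, so the paths build up over time.
  stroke(255, 20);
  for (const p of particles) {
    const angle = noiseToAngle(noise(p.x * NOISE_SCALE, p.y * NOISE_SCALE));
    const prev = p.copy();
    p.add(p5.Vector.fromAngle(angle)); // step one pixel in the field's direction
    line(prev.x, prev.y, p.x, p.y);
    // Respawn particles that leave the canvas.
    if (p.x < 0 || p.x > width || p.y < 0 || p.y > height) {
      p.set(random(width), random(height));
    }
  }
}
```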

Perlin Noise & Noise-Based Techniques

The random() function in p5.js generates pseudorandom numbers. It can return a floating-point number between 0 and 1, a number within a specified range, or a random element from an array, depending on how it’s used. It’s useful for introducing unpredictability and variation in sketches.

The noise() function in p5js uses Perlin noise: a smooth, gradient-based form of randomness that avoids abrupt changes and is often used to add natural variation in movement, color, or shapes. By sampling noise at various scales or "octaves," artists produce effects of terrains, clouds, or fluid dynamics. The result is controlled yet unpredictable.

prompt: “Generate p5.js code that draws a 2D Perlin noise field in grayscale, continuously updating over time to look like clouds.”
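
One way the cloud prompt above could turn out, as a rough sketch. It draws the noise field as a grid of gray squares instead of individual pixels to keep the frame rate reasonable. CELL, SCALE, SPEED, and the toGray() helper are our own names and values.

```javascript
const CELL = 10;    // size of each square in pixels
const SCALE = 0.02; // smaller = smoother, larger = busier
const SPEED = 0.01; // how fast the field changes

// Our own helper: turn a noise value in [0, 1] into a gray level from 0 to 255.
function toGray(n) {
  return Math.round(Math.min(1, Math.max(0, n)) * 255);
}

function setup() {
  createCanvas(400, 300);
  noStroke();
}

function draw() {
  const t = frameCount * SPEED; // the third noise dimension acts as time
  for (let x = 0; x < width; x += CELL) {
    for (let y = 0; y < height; y += CELL) {
      fill(toGray(noise(x * SCALE, y * SCALE, t)));
      rect(x, y, CELL, CELL);
    }
  }
}
```

Swap noise(...) for random() in the fill line to see the difference between smooth noise and plain randomness.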

Particle Systems

Particle systems involve numerous small entities (particles) that each follow simple behavioral rules, typically with an emitter releasing new particles over time. By combining forces like gravity, drag, and random variation, these systems can simulate natural phenomena (like fire or smoke) or produce dense, mesmerizing compositions that evolve frame by frame.

prompt: “Create a p5.js sketch of a particle system where new particles are emitted from the bottom center of the screen and rise upwards, fading out over time.”
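
A bare-bones particle system matching the prompt above: particles are emitted from the bottom center, rise, and fade out. Particles are plain objects here; the helper names, emission rate, and fade speed are assumptions made for this example.

```javascript
// These helpers are our own, not p5 functions.
function makeParticle(x, y, vx, vy) {
  return { x, y, vx, vy, life: 255 };
}

// Move the particle and reduce its remaining life.
function updateParticle(p) {
  p.x += p.vx;
  p.y += p.vy;
  p.life -= 3;
}

function isDead(p) {
  return p.life <= 0;
}

let particles = [];

function setup() {
  createCanvas(400, 400);
  noStroke();
}

function draw() {
  background(0);
  // Emitter: add two new particles per frame at the bottom center.
  for (let i = 0; i < 2; i++) {
    particles.push(makeParticle(width / 2, height, random(-1, 1), random(-3, -1)));
  }
  for (const p of particles) {
    updateParticle(p);
    fill(255, 150, 50, p.life); // life doubles as alpha, so particles fade out
    circle(p.x, p.y, 10);
  }
  // Remove faded particles so the array does not grow forever.
  particles = particles.filter((p) => !isDead(p));
}
```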

Boids / Flocking

Originating from Craig Reynolds’s model of bird flocking, boids follow rules of separation, alignment, and cohesion to create emergent group movement or murmuration. Even with minimal constraints, the resulting swarming, flocking, or schooling visuals evoke lifelike patterns and make for captivating, dynamic scenes.

prompt: “Write a p5.js sketch implementing boids flocking with 50 boids. They should avoid overlapping, try to move with neighbors, and stay within the canvas area.”
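
A compact sketch of the three rules named above (separation, alignment, cohesion). It is one simple reading of the boids idea, not a reference implementation: the constants, the rule weights, and the choice to wrap boids around the edges are all assumptions you can tune.

```javascript
const NUM_BOIDS = 50;
const PERCEPTION = 50; // how far a boid can "see"
const MAX_SPEED = 3;
const MAX_FORCE = 0.05;
let boids = [];

// Our own helper: wrap a coordinate around the canvas edges.
function wrap(value, max) {
  if (value < 0) return value + max;
  if (value > max) return value - max;
  return value;
}

function setup() {
  createCanvas(600, 400);
  for (let i = 0; i < NUM_BOIDS; i++) {
    boids.push({
      pos: createVector(random(width), random(height)),
      vel: p5.Vector.random2D().mult(random(1, MAX_SPEED)),
    });
  }
}

// Turn a desired direction into a small, limited steering force.
function steer(boid, desired) {
  if (desired.magSq() === 0) return desired;
  return desired.setMag(MAX_SPEED).sub(boid.vel).limit(MAX_FORCE);
}

function flock(boid) {
  const separation = createVector();
  const alignment = createVector();
  const cohesion = createVector();
  let count = 0;
  for (const other of boids) {
    const d = boid.pos.dist(other.pos);
    if (other === boid || d === 0 || d > PERCEPTION) continue;
    // Separation: push away, more strongly from close neighbors.
    separation.add(p5.Vector.sub(boid.pos, other.pos).div(d * d));
    alignment.add(other.vel); // Alignment: sum of neighbor velocities
    cohesion.add(other.pos);  // Cohesion: sum of neighbor positions
    count++;
  }
  const force = createVector();
  if (count > 0) {
    cohesion.div(count).sub(boid.pos); // vector toward the local center
    force.add(steer(boid, separation).mult(1.5));
    force.add(steer(boid, alignment));
    force.add(steer(boid, cohesion));
  }
  return force;
}

function draw() {
  background(30);
  noStroke();
  fill(255);
  // Compute all forces first so every boid reacts to the same snapshot.
  const forces = boids.map(flock);
  boids.forEach((b, i) => {
    b.vel.add(forces[i]).limit(MAX_SPEED);
    b.pos.add(b.vel);
    b.pos.x = wrap(b.pos.x, width);
    b.pos.y = wrap(b.pos.y, height);
    circle(b.pos.x, b.pos.y, 6);
  });
}
```

Try changing the 1.5 weight on separation, or PERCEPTION, and watch how the group behavior shifts.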

Fractals & Recursive Structures

Fractals use recursive functions or iterative processes to reveal self-similar patterns across multiple scales. Techniques like L-systems (Lindenmayer systems), the Mandelbrot set, or tree recursion create visually infinite layers of detail and demonstrate how simple equations can generate hypnotically complex imagery.

prompt: “Generate a p5.js fractal tree sketch that uses recursion to draw multiple branches from each node, with sliders to control branch angle and depth.”
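
A short recursive tree sketch in the spirit of the prompt above. To keep it small, the mouse position controls the branch angle instead of sliders, and the depth is a fixed constant. The countBranches() helper is only there to show how quickly recursion grows; all names and values are our own.

```javascript
const MAX_DEPTH = 8;

// Our own helper: how many branches a two-way tree of this depth draws
// (depth 0 is just the trunk).
function countBranches(depth) {
  if (depth === 0) return 1;
  return 1 + 2 * countBranches(depth - 1);
}

function setup() {
  createCanvas(500, 500);
  stroke(255);
}

function branch(len, depth, angle) {
  line(0, 0, 0, -len);     // draw this branch pointing up
  if (depth === 0) return; // base case: stop the recursion
  translate(0, -len);      // move to the tip of the branch
  push();
  rotate(angle);           // right child
  branch(len * 0.7, depth - 1, angle);
  pop();
  push();
  rotate(-angle);          // left child
  branch(len * 0.7, depth - 1, angle);
  pop();
}

function draw() {
  background(20);
  // Mouse position sets the branch angle between 0 and 90 degrees.
  const angle = map(mouseX, 0, width, 0, HALF_PI);
  translate(width / 2, height);
  branch(120, MAX_DEPTH, angle);
}
```

With MAX_DEPTH set to 8, each frame draws 511 branches; raising the depth by one roughly doubles that.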

Cellular Automata

A grid-based system where each cell transitions between states based on rules involving its neighbors. Conway’s Game of Life is the classic example, showing how seemingly complex organisms can arise from straightforward conditions. Artists extend these concepts to create evolving abstract patterns and even interactive art pieces.

prompt: “Create a p5.js version of Conway’s Game of Life with a grid of 50×50 cells, using random initialization, and animate each generation.”
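
A sketch of Conway's Game of Life following the prompt above. The rules are the standard ones (a live cell survives with 2 or 3 live neighbors, a dead cell comes alive with exactly 3). Two choices are our own assumptions: the grid wraps around at the edges, and about 30% of cells start alive.

```javascript
const COLS = 50;
const ROWS = 50;
const CELL = 10; // cell size in pixels
let grid;

// Count the live neighbors of cell (r, c); edges wrap around.
function countNeighbors(g, r, c) {
  const rows = g.length;
  const cols = g[0].length;
  let sum = 0;
  for (let dr = -1; dr <= 1; dr++) {
    for (let dc = -1; dc <= 1; dc++) {
      if (dr === 0 && dc === 0) continue; // skip the cell itself
      sum += g[(r + dr + rows) % rows][(c + dc + cols) % cols];
    }
  }
  return sum;
}

// Return the next generation as a new grid (the old one is not modified).
function step(g) {
  return g.map((row, r) =>
    row.map((alive, c) => {
      const n = countNeighbors(g, r, c);
      if (alive === 1) return n === 2 || n === 3 ? 1 : 0;
      return n === 3 ? 1 : 0;
    })
  );
}

function setup() {
  createCanvas(COLS * CELL, ROWS * CELL);
  frameRate(10);
  grid = [];
  for (let r = 0; r < ROWS; r++) {
    const row = [];
    for (let c = 0; c < COLS; c++) row.push(random() < 0.3 ? 1 : 0);
    grid.push(row);
  }
}

function draw() {
  background(255);
  fill(0);
  noStroke();
  for (let r = 0; r < ROWS; r++) {
    for (let c = 0; c < COLS; c++) {
      if (grid[r][c] === 1) rect(c * CELL, r * CELL, CELL, CELL);
    }
  }
  grid = step(grid); // advance one generation per frame
}
```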

Data Visualization & Sonification

Turning data sets into visual or auditory experiences. This can range from straightforward charts to immersive representations where color, position, shape, or sound encodes underlying information. It blends aesthetic sensibilities with analytical rigor to offer deeper insights into complex data.

prompt: “Create a p5.js data visualization of US debt over 50 years.”

Generative Art In-class Exercise

Play with the advanced techniques above. You can use/alter the sample prompts to get started.

p5.js Advanced Methods for Generative Art

Shape & Path Manipulation

- beginShape() / endShape(): Start and finish a custom shape. [beginShape() endShape()]

- vertex(x, y): Define a point in a custom shape. [vertex()]

- curveVertex(x, y): Similar to vertex(), but creates smooth curves. [curveVertex()]

- bezierVertex(): Adds Bézier control points to your shape. [bezierVertex()]
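
A small example of building a custom shape with beginShape(), vertex(), and endShape(): a blob whose outline wobbles using noise(). The polarToXY() helper and all the numbers are our own choices for illustration.

```javascript
// Our own helper: convert a radius and angle into an [x, y] pair.
function polarToXY(radius, angle) {
  return [radius * Math.cos(angle), radius * Math.sin(angle)];
}

function setup() {
  createCanvas(400, 400);
}

function draw() {
  background(250);
  translate(width / 2, height / 2); // draw around the canvas center
  fill(255, 120, 90);
  stroke(40);
  beginShape();
  for (let a = 0; a < TWO_PI; a += 0.1) {
    // Sampling noise on a circle keeps the start and end of the outline matched up.
    const r = 100 + noise(Math.cos(a) + 1, Math.sin(a) + 1, frameCount * 0.01) * 80;
    const [x, y] = polarToXY(r, a);
    vertex(x, y);
  }
  endShape(CLOSE); // CLOSE connects the last point back to the first
}
```

Replace vertex() with curveVertex() to compare a straight-edged outline with a smoothed one.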

Color & Style

- lerpColor(c1, c2, amt): Blend two colors by a value between 0 and 1. [Reference]

- colorMode(mode, [max1], [max2], [max3], [maxA]): Switch between RGB, HSB etc. [Reference]

- blendMode(mode): Set how shapes blend (e.g., ADD, MULTIPLY). [Reference]

Math & Vectors

- random(min, max): Random float in range. [random()]

- map(val, inMin, inMax, outMin, outMax): Maps a value to another range. [map()]

- dist(x1, y1, x2, y2): Distance between two points. [dist()]

- p5.Vector: Class for vector math. [p5js.Vector]
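
A sketch that uses dist() and range mapping together: circles in a grid grow as the mouse gets closer. mapRange() is our own re-implementation of the linear formula behind map(), included only so you can see what map() computes; the grid spacing and size limits are arbitrary.

```javascript
// Our own version of the formula behind map(), for illustration.
function mapRange(val, inMin, inMax, outMin, outMax) {
  return ((val - inMin) / (inMax - inMin)) * (outMax - outMin) + outMin;
}

function setup() {
  createCanvas(400, 400);
  noStroke();
}

function draw() {
  background(240);
  fill(30, 120, 200);
  for (let x = 20; x < width; x += 40) {
    for (let y = 20; y < height; y += 40) {
      const d = dist(x, y, mouseX, mouseY);
      // Near the mouse: big circles. Far away: small ones.
      // map(d, 0, 300, 36, 4) would give the same number as mapRange here.
      const size = constrain(mapRange(d, 0, 300, 36, 4), 4, 36);
      circle(x, y, size);
    }
  }
}
```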

Transformations

- push() / pop(): Save and restore drawing style and transforms. [Reference]

- translate(x, y): Move origin to new location. [Reference]

- rotate(angle): Rotate the drawing context. [Reference]

- scale(s): Scale the drawing context. [Reference]

Math & Noise

- noise(x, [y], [z]): Perlin noise (smoother randomness). [Reference]

- lerp(start, stop, amt): Linearly interpolate between values. [Reference]

- constrain(val, min, max): Restrict value between a min and max. [Reference]

Pixels & Image

- loadPixels() / updatePixels(): Directly access and modify canvas pixels. [Reference]

- get(x, y): Reads pixel color at position. [Reference]

- set(x, y, color): Sets pixel at position. [Reference]
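
A sketch of direct pixel access with loadPixels() and updatePixels(). The pixels array stores four numbers per pixel (red, green, blue, alpha). The pixelIndex() helper is our own and assumes pixelDensity(1); on high-density displays without that call, the array layout is different.

```javascript
// Our own helper: index of the red value for pixel (x, y) in the pixels array.
// Only valid when pixelDensity is 1.
function pixelIndex(x, y, w) {
  return 4 * (y * w + x);
}

function setup() {
  pixelDensity(1); // one set of four values per canvas pixel
  createCanvas(200, 200);
  noLoop();        // draw a single frame
}

function draw() {
  loadPixels();
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = pixelIndex(x, y, width);
      const v = noise(x * 0.02, y * 0.02) * 255;
      pixels[i] = v;       // red
      pixels[i + 1] = v;   // green
      pixels[i + 2] = 255; // blue
      pixels[i + 3] = 255; // alpha (fully opaque)
    }
  }
  updatePixels(); // push the modified array back to the canvas
}
```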