Cloud Castles & Robot Fae:

Prompt 1 - Written by Me:

“An image of fluffy clouds with metal, industrial style castles sitting upon them. The clouds look touchably soft like floating marshmallows. There is a lot of greenery and climbing plants like ivy. Tiny robot-pixies inhabit these castles and clouds with their cyberpunk and fantasy elements combined. There is whimsical decor all around like macaroons for seat cushions. This should be shown in a realistic style. The aspect ratio of this image should be 16:9. there should be a pastel and dreamy quality to the image.”

It's not a horrible prompt, but it wasn't specific enough to produce the vision that I had in my head. Dall-E 3 left out some of the details in my prompt in favor of focusing on others. The major detail that I left out completely was the location of this scene (the sky), and that is why the image did not turn out as I wanted it to. I also had to reiterate to Dall-E 3 that the robot pixies were not optional but required to complete my vision. The language I used to describe them made sense to me but not necessarily to this AI model, so I think that caused some confusion about what I was asking for.

Revision to Prompt 1:

“Please regenerate this photo and be sure to add in the robot pixies flying around and sitting on the macaroon cushions. The image should also be close up and the background should be of the sky. These castles and their clouds are floating in the sky.”

This revision alone brought me much closer to what I wanted for this image. The AI model ignored my instructions about where the fae/pixies should be and what they should be doing (sitting on the cushions), but it read my mind elsewhere, and that's part of the fun when generating images with AI. I wanted the existing pixies to look a specific way, so I described that look and used Dall-E 3's new paintbrush tool to mark where I wanted them to be located. The result was…underwhelming, to say the least.

Revision 2 to Prompt 1:

“Add in dainty, feminine robot fae that contrast the existing robot pixies. These new fae should have pastel-colored metal exteriors and mechanical wings.”

The result was that quite literally nothing changed in the image. It “regenerated” a photo that looked identical to the last. I think there may have been some sort of glitch, as the new updates to this AI have been causing problems for me and my peers over the past few weeks. I got a little annoyed and responded to the AI with, “You didn't add anything to this photo at all. Do what I said.” The result was quite different from what was generated previously, in the best way, although it had an odd border on the left and right sides that I didn't ask for. This was an easy fix: I used the paintbrush tool and specified that I wanted the image to extend to the edges. A happy accident?

After a bit of back and forth, revision, and specification, I was able to achieve an image that I both wanted and didn't know that I wanted based on my original idea. I was surprised to have achieved this aesthetic and look within just a few tries, as I have had a lot more difficulty with past AI-image generation projects. The takeaway from generating this image is that the new tools, although helpful and cool for some parts of image generation, can mess up or entirely change what you already have (so be careful with them). Use descriptive language to get what you want out of the image. For example, calling the pixies “robot fae” was not enough; I also needed to specify that they had a feminine and “dainty” appearance, as well as continue to make sure that they had their metal exteriors.

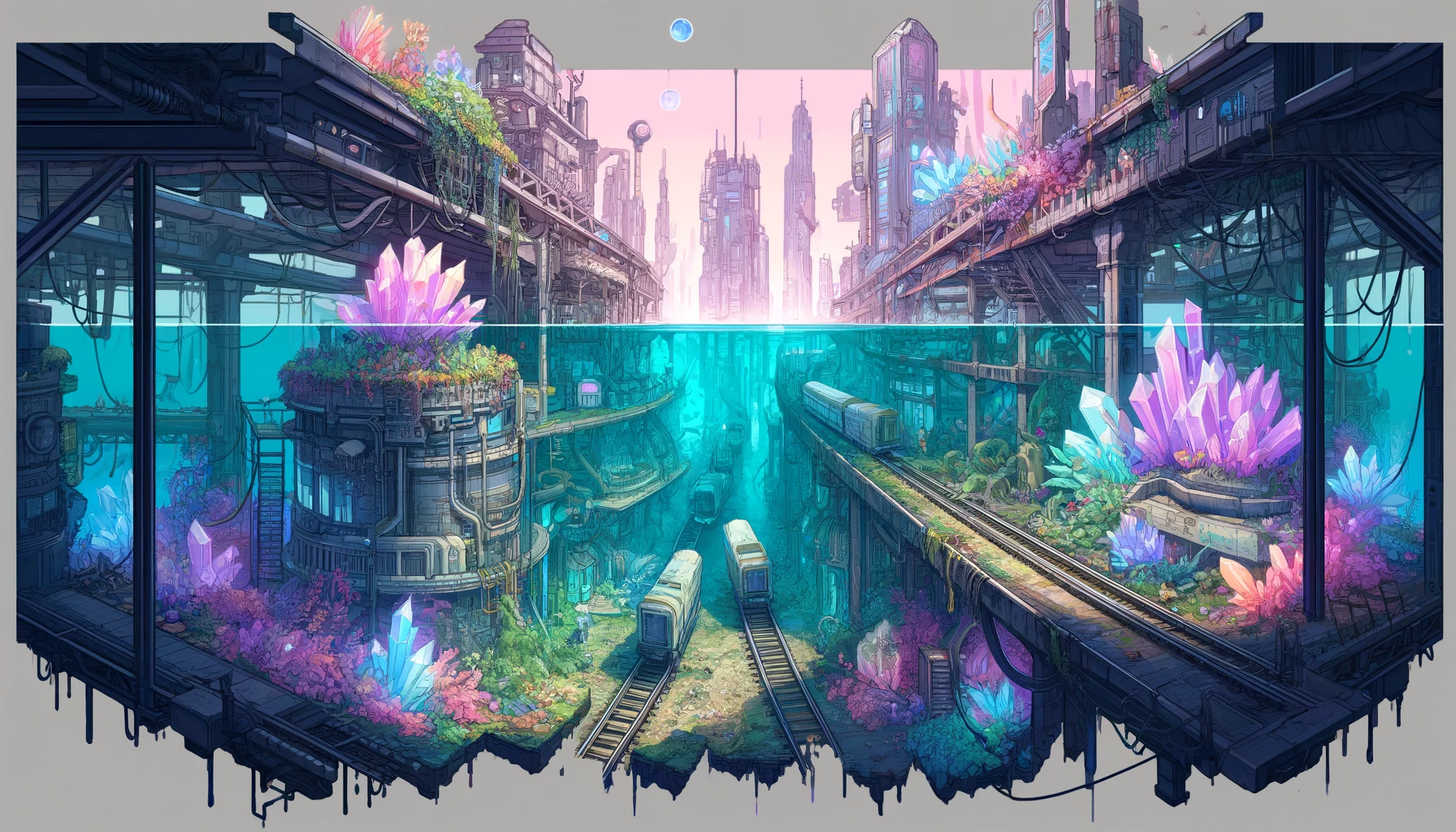

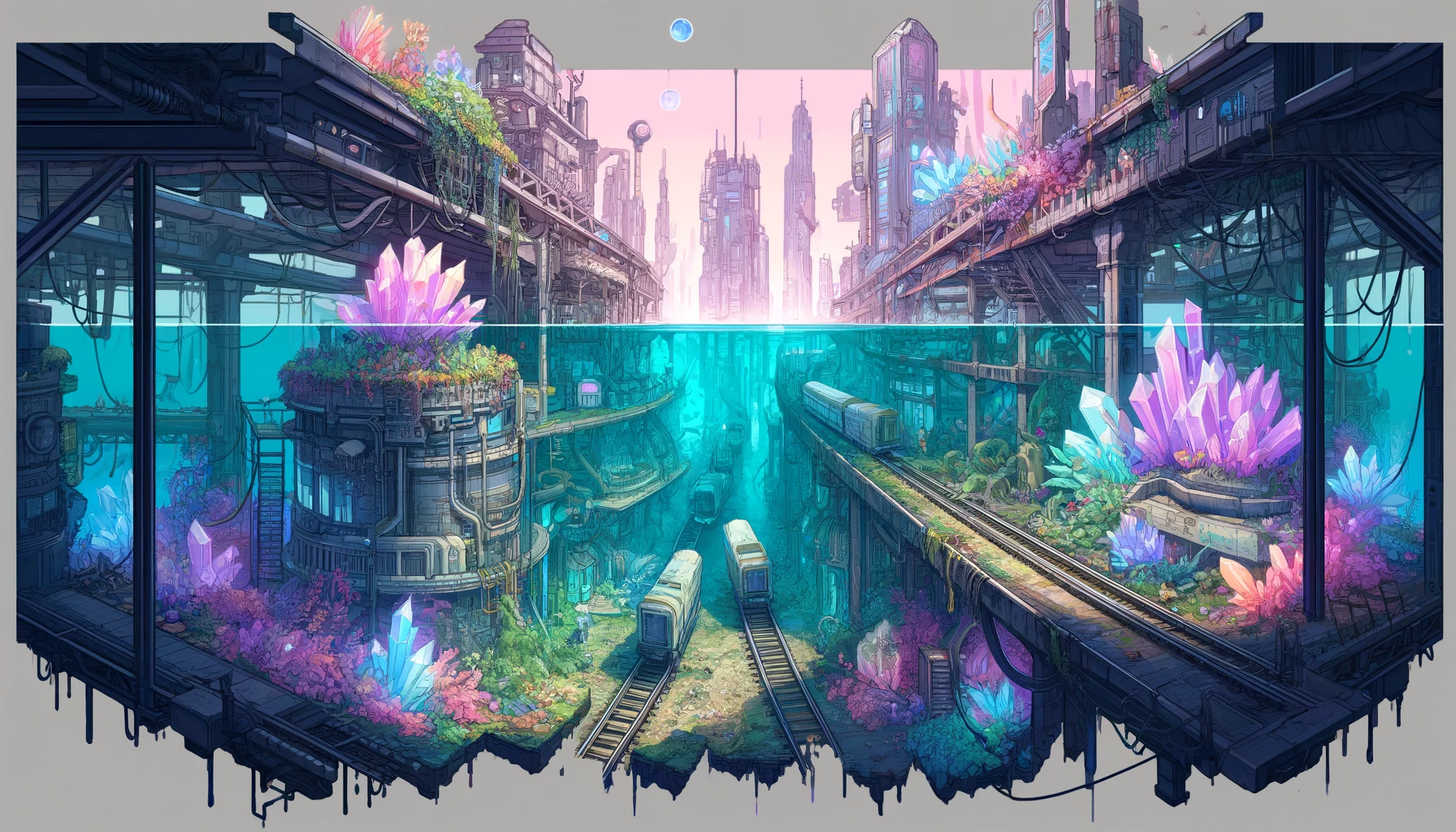

Aliens Gardening Underwater:

Prompt 2 - Written by Me:

“An image of aliens gardening underwater. The plants that they are tending to appear to be other aliens in the garden, almost like cabbage patch kids but instead of humans, they are alien heads coming up from the ground in various stages of maturity. They are also growing crystals to power their underwater, cyberpunk style structures and buildings. You can see the bustling underwater city that they come from in the background. There appears to be a railway system going from the city to the farm and the vehicles on it appear to be cyberpunk style minecarts. There is a lot of seaweed and other underwater plants growing around. This farm and the city have been somewhat lost to time, due to them not being maintained properly over the years and metal is decaying and rusting under the salty water of the ocean.”

With the first image as a kind of “warm up,” I jumped right in to generate the second. This initial prompt is specific, but in some ways almost too specific for this AI model to follow. I also used some language that is hard for the AI to interpret, specifically the sentence about the “cyberpunk style minecarts.” (I wanted the vehicles to look more like mine carts, but the words “cyberpunk” and “railway” dominated the result.) I was intentionally non-specific about the appearance of the aliens themselves, as I wanted to see what the AI would come up with as filler, and I wanted it to focus more on generating the other aspects of this scene. I was happy with the result and felt like it fit my vision, aside from the cabbage-looking plants in the garden. I wanted those to appear as alien heads, as if the aliens were growing their future citizens in a garden, like how Cabbage Patch Kids are “born.” Focusing heavily on this part of the photo caused me a lot of difficulty.

Revision 1 to Prompt 2:

“Please regenerate this image and make sure that it does not have a border of any kind. The image should fill the entire space. The color palette should have pastel purples, greens, and blues.”

This is where I got extra ambitious and tried to make this photo's color palette match that of my first “robot pixie” image. I felt that if I could make all of the images look cohesive in that way, it would add to the overall aesthetic of my final project's website. However, the results changed the style and vibe of the image in a way that was too far from my original vision, so I decided to sacrifice the matching palette for the actual content of the photo for now and went back to the first image from prompt 2. First, I had it fill the image to the edges, as it had again added that weird border to fill the 16:9 aspect ratio. Then I used the paintbrush tool on the garden and asked, “Please change these cabbage-like plants into alien heads.” I encountered the same issue as I did with the first prompt: it didn't do anything. I responded, “Please listen to what I just said. You didn't change anything at all.” My poor attempt at being firm yet polite with the AI resulted in an image that confused me. It mixed elements from my abandoned pastel-palette prompts with the image that I liked and made something interesting, but not exactly what I wanted. The AI also missed my entire point of changing the plants into alien heads. I decided to take a different approach.

Prompt 2 New - Dall-E 3's Prompt from the Image that I Liked:

“An image of a vivid underwater scene depicting aliens gardening. The garden consists of alien heads in the ground, buried in dirt, similar to cabbage patch kids, growing in various stages of maturity. The aliens are tending to these plants, as well as crystals that are growing out of ground to power their underwater, cyberpunk-style structures. In the background, a bustling underwater city with decaying and rusting metal structures due to lack of maintenance is visible. A railway system connects the city to the farm, with vehicles resembling cyberpunk minecarts traveling on it. The scene is rich with underwater flora like seaweed, capturing a sense of a lost, ancient civilization in a 16:9 aspect ratio. The color scheme consists of pastel blues, purples, and greens and it gives the viewer a sense of being under water.”

I often do this when I am generating images: I emulate an AI image generator's own language to get a better result. I try to find the “hidden” prompt that it used to generate the image. This is an advantage that I've found when using Dall-E 3, as I can copy its prompt, paste it back into the generator, and change some of the wording slightly, so that the image is made with language the model understands. As far as I know, doing this on Midjourney requires a lot more effort and practice with that tool. The resulting image from this prompt fit my vision nearly exactly, from the color palette to the content and composition. As you can see, I added some other elements for world-building, like the crystals growing out of the ground, and specified that the viewer should feel like they are also under water.

Although generating this image did not go as quickly as the first, I think that it turned out better in the end. Because my initial prompt was specific and the reworded prompt kept those details, the reworded prompt produced an image that was nearly exactly what I wanted on its first attempt. Learning how to do this and how to improve my prompts definitely cuts down on the amount of time that it takes to generate images. This supports my point that not just anyone can pick up an AI image generation tool, immediately know what they're doing with it, and instantly get the results that they're looking for. It requires a different type of skill that combines general artistic knowledge and language.

Time Traveler Visits Futuristic Ruins with Cyber Sigils:

Prompt 3 - A Collaboration Between Myself and Dall-E 3:

“A dusty desert scene depicting futuristic ruins. Glowing cyber sigils are carved into the ruins and emit a faint blue light from within. The remnants of robots from times past lie scattered around the beige, sun ridden wasteland. The scene is rich with cracked and discarded technology, covered in dirt and dust, capturing a lost ancient civilization. There is a time traveler from the 1800s in the foreground, wandering about the wasteland and inspecting the deserted items before him. This image has a neutral color palette and is in a 16:9 aspect ratio.”

For this final prompt, I copied and pasted the Dall-E 3 prompt from the final version of my second image and replaced the scene it described with the scene for this new, third image. The first result was not as close to my internal vision as I'd like, so I hit the regenerate button and got an image that was more like what I was looking for.

The first image, while cool, does not contain the robots that I asked for and isn't as visually interesting as I'd like (something that I did not specify in my prompt, to be fair). The regeneration contains all of the elements that I asked for and is well put together in its color palette and composition. The time traveler's style of dress is similar to what I was going for, and the image correctly captures the blue color that I wanted from the ruins and sigils.
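The copy-and-swap approach I used for this third prompt works a lot like a fill-in-the-blanks template. Here is a rough sketch of that idea in Python. The `ScenePrompt` class and its slot names are hypothetical and made up for this illustration; they are not part of Dall-E 3 or any library. It only assembles text that could then be pasted into the generator.

```python
from dataclasses import dataclass


@dataclass
class ScenePrompt:
    """Hypothetical slots for a scene prompt (names invented for this sketch)."""
    setting: str
    details: list[str]
    richness: str
    palette: str
    aspect_ratio: str = "16:9"

    def render(self) -> str:
        # Keep the sentence skeleton fixed and swap only the scene-specific parts.
        parts = [f"{self.setting}."]
        parts.extend(f"{detail}." for detail in self.details)
        parts.append(f"The scene is rich with {self.richness}.")
        parts.append(
            f"This image has a {self.palette} color palette "
            f"and is in a {self.aspect_ratio} aspect ratio."
        )
        return " ".join(parts)


# Fill the slots with pieces of the third prompt from this write-up.
ruins = ScenePrompt(
    setting="A dusty desert scene depicting futuristic ruins",
    details=[
        "Glowing cyber sigils are carved into the ruins and emit a faint blue light from within",
        "There is a time traveler from the 1800s in the foreground",
    ],
    richness="cracked and discarded technology, covered in dirt and dust",
    palette="neutral",
)
print(ruins.render())
```

Keeping the skeleton fixed means that details which were easy to forget in my earlier prompts, like the color palette and the aspect ratio, always have a slot to fill.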

Being specific and using the language that Dall-E 3 understands from the start paid off, as it generated an image that I could use in a much shorter amount of time. I think that is the main goal of these new AI tools: to make our jobs easier as humans, but not to take them over. As with other programs that make artists' lives easier, like Photoshop, Illustrator, and other editing and digital art tools, AI image generators require a basic level of skill and, most importantly, artistic knowledge to be used properly and efficiently.