1.0

DTC 101

Digital Technology

Over the past thirty years, “the digital” has had increasingly rapid, disruptive, and mostly beneficial effects on business, education, health and medicine, the creative arts, shopping, social interaction, banking, transportation, scientific research, warfare, politics, and government. Digital technology will continue to change, at a very fast rate, every aspect of human life and culture. Through these changes, human beings are becoming more virtually connected, safer, and more efficient, but digital technologies also make our embodied lives more dependent on non-human systems and more open to surveillance, cognitive manipulation, and control.

"Digital Technology" is any technological device that functions through binary computational code. American engineers developed digital technology in the mid-twentieth century, basing their ideas on Gottfried Wilhelm Leibniz's system of binary computing. Digital devices include mobile phones, tablets, laptops, computers, HD televisions, DVD players, and communication satellites. The hardware that makes up each of these devices includes a tiny computer called a microprocessor, which performs calculations on digital code and then makes decisions based on the results. Memory chips store digital information while it is not being processed. Software, instructions in the form of digital code, controls the operations of many devices that use digital technology.

This chapter focuses on the core elements of digital technology: digital code, computer hardware and computer software. The aim is not to cover every detail of how computers work, but to examine the materiality of "the digital" in order to better understand how these technologies process and store information for human purposes. What humans do with this technology is digital culture.

1.1

Digital Data

Most acts of communication expend energy through the material involved in the making and sharing of signs. Spoken words are sound waves made by pushing air through various formations of the mouth. Writing with a pen requires the energy to move the pen on paper, but also the energy needed to manufacture and distribute writing implements.

A text message sent to a friend is made of digital code. In this case, the materiality of code is the hardware and software of a computer device. The energy to send a text message is the electrical expenditure to compute, process, store, and transmit discrete symbols. Even though a snippet of digital code is nearly as weightless as a thought, it takes an enormous amount of electrical energy to run the hardware and software of a global, always-on Internet. The alphanumeric symbols typed into and sent from a phone are translated into digital code by the device and then organized into packets of data that travel the Internet to another device. Despite the ease with which this happens, there may be material interruptions. Weather, blackouts, and geopolitical problems can cause delays in putting the pieces of a message back together on the receiver’s device screen. There is a thickness of human labor and natural resources involved in the transmission of digital data.

The word digital means discrete and discontinuous, like the digits (fingers) used to count by hand. Today, the word commonly refers to data that is encoded in binary form. Alphanumeric symbols are also made of discrete and discontinuous units, so representing them digitally is much simpler than converting continuous, or analogue, signals into digital form: the sound waves of voice or music, for example.
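To make the difference between continuous and discrete concrete, here is a minimal Python sketch of how a smooth wave might be turned into a short list of whole numbers. The function name `sample_wave`, the sample rate, and the choice of 16 levels are assumptions made for illustration; real audio hardware uses far higher rates and many more levels.

```python
import math

def sample_wave(frequency_hz, sample_rate_hz, duration_s, levels=16):
    """Sample a sine wave and round each sample to one of `levels` whole-number steps."""
    count = int(sample_rate_hz * duration_s)
    samples = []
    for n in range(count):
        t = n / sample_rate_hz                                  # time of this sample, in seconds
        amplitude = math.sin(2 * math.pi * frequency_hz * t)    # continuous value between -1 and 1
        step = round((amplitude + 1) / 2 * (levels - 1))        # discrete step between 0 and levels - 1
        samples.append(step)
    return samples

# One second of a 1 Hz wave, measured 8 times (illustrative values only).
digital = sample_wave(frequency_hz=1, sample_rate_hz=8, duration_s=1)
print(digital)
```

Everything between the sampled moments, and every value between two steps, is discarded. That loss is the price of turning a continuous signal into discrete data.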

Binary Numbers

Representing Numbers and Letters with Binary:

Crash Course Computer Science #4

All of the images, sounds and words stored on a digital computer are encoded with two binary digits, or bits: "0" and "1". Humans initially started counting with the decimal system, or base 10, because of our 10 fingers. But there are other number systems for different purposes. Computers count in base 2, or binary, because they store information in transistors, which have only two states: "on" or "off." A binary string of eight bits is called a byte.

With binary counting, instead of counting up to 9 as in the decimal system, we count only to 1 before we have to add another digit in front of the first digit. So 0 is "0" and 1 is "1", but 2 is "10" because 1 is the maximum value of a single digit in base 2.
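The counting rule described above can be sketched in Python. The function name `to_binary` is an assumption for illustration, and repeated division by 2 is one common way to do the conversion, not the only one.

```python
def to_binary(number):
    """Convert a non-negative whole number to a string of binary digits."""
    if number == 0:
        return "0"
    digits = ""
    while number > 0:
        digits = str(number % 2) + digits   # the remainder is the next digit, added on the left
        number //= 2                        # move on to the next place value
    return digits

# Count from 0 to 5 in decimal and in binary.
for n in range(6):
    print(n, "->", to_binary(n))

# Eight bits (one byte) can hold 2**8 = 256 different values, from 0 to 255.
print(255, "->", to_binary(255))
```

Python's built-in `bin()` performs the same conversion. Writing it out by hand shows that a new digit is needed every time the count passes a power of 2.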

ASCII: Numbers and Letters in Binary

The American Standard Code for Information Interchange (ASCII for short) is a widely used standard code for storing alphanumeric characters. In ASCII, A = 65, B = 66, and so on. At the hardware level, however, computers store these values in binary.

With that in mind, take the first step that every computer scientist takes on their digital journey and type "hello world" into the textbox below. You will see what your computer translates from binary into ASCII, and finally into English for human-readable text.
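The same translation can be sketched in a few lines of Python. The function names `text_to_binary` and `binary_to_text` are assumptions for illustration. Python's `ord` returns a Unicode code point, which for the plain English characters used here is the same number as the ASCII code.

```python
def text_to_binary(text):
    """Return (character, ASCII code, 8-bit binary string) for each character in `text`."""
    rows = []
    for character in text:
        code = ord(character)          # for plain English text this equals the ASCII code
        bits = format(code, "08b")     # the same number written as eight binary digits
        rows.append((character, code, bits))
    return rows

def binary_to_text(bit_strings):
    """Translate a list of 8-bit binary strings back into readable text."""
    return "".join(chr(int(bits, 2)) for bits in bit_strings)

rows = text_to_binary("hello world")
for character, code, bits in rows:
    print(repr(character), code, bits)

# Going the other way recovers the original message.
print(binary_to_text([bits for _, _, bits in rows]))
```

Note that the space between the two words is a character too, with its own code (32).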

1.2

Hardware


An artefact found in a shipwreck off the coast of the Greek island of Antikythera is believed to be the first known analogue computer, built around 150 BC. It is a complex system of gears used to track astronomical cycles and predict eclipses.

Calculating machines that perform specific tasks are not new. What is new about digital computers is that they are general-purpose machines that manipulate symbols and can work on any problem that can be expressed as a set of instructions, or a program. They are also fast and efficient. Being digital, computers are deterministic. Understanding only "on" and "off", they do exactly as they are told.

In the early 19th century, a "computer" was a minimally skilled laborer who knew how to add and subtract. The industrial age was built on mass production using machines and the design of efficient linear systems or assembly lines. Many of the new methods of production and distribution involved complex calculations based on variable data. Tables of logarithms were used to process this data and human computers were hired to perform these calculations.

The definition of a computer remained the same until the end of the 19th century, when the industrial revolution gave rise to machines whose primary purpose was calculating.

Early Computing:

Crash Course Computer Science #1

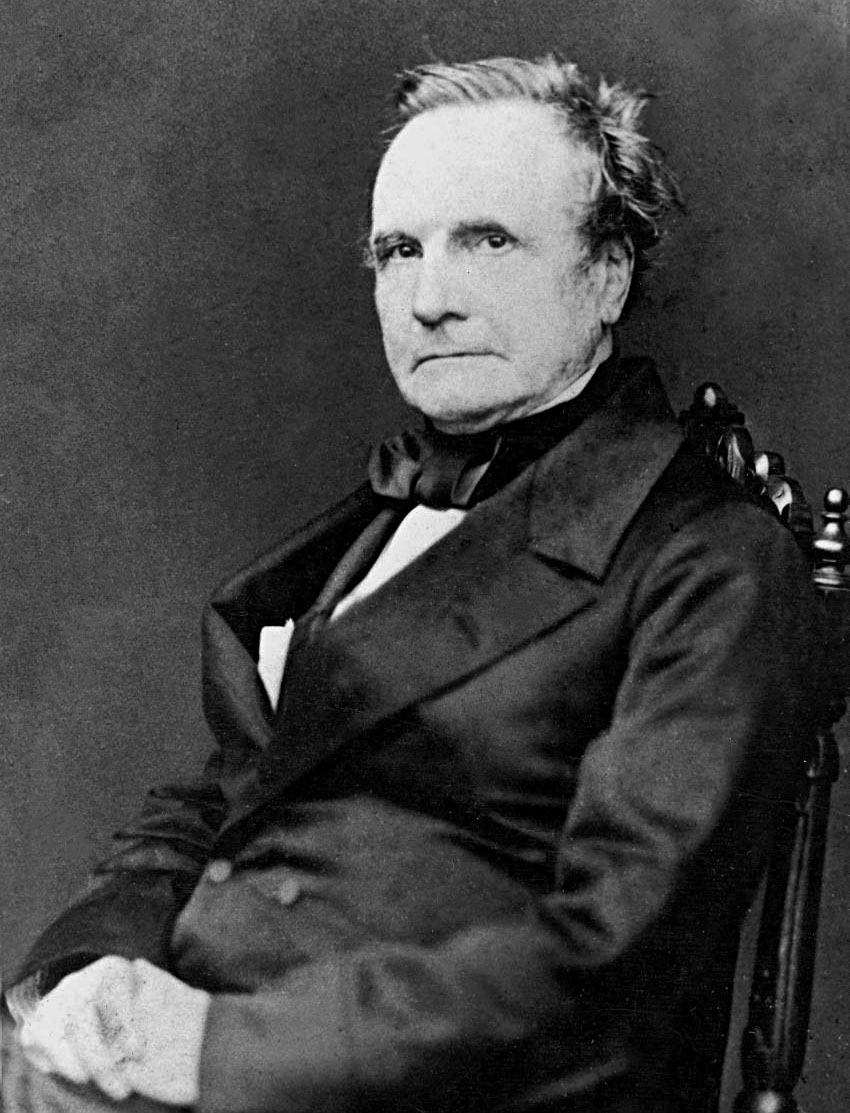

Charles Babbage

(1791 - 1871)

“As soon as an Analytical Engine exists, it will necessarily guide the future course of science” - Charles Babbage

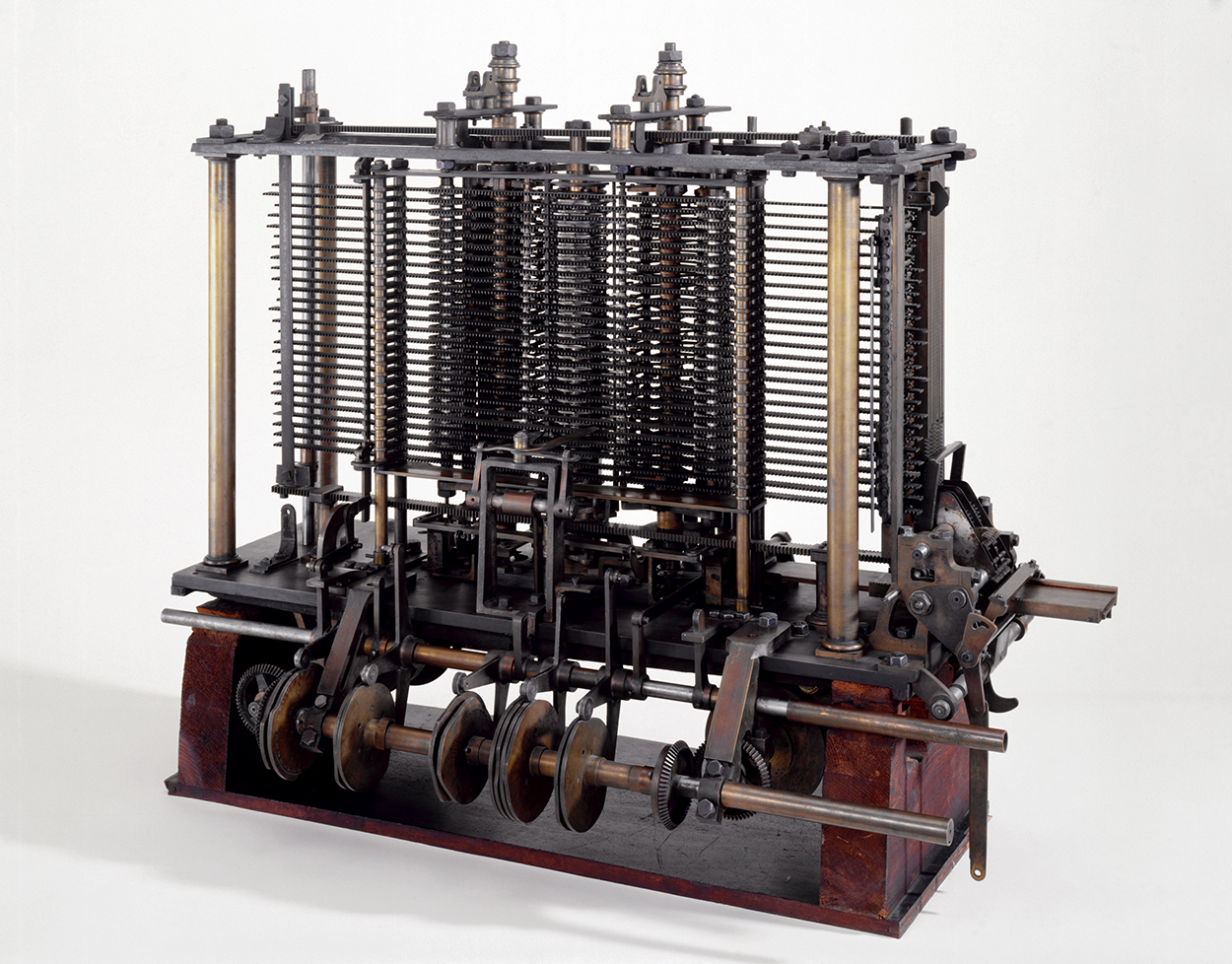

In 1812, a London mathematician and engineer named Charles Babbage was observing fallible human computers at their desks performing their calculations on logarithmic tables when it struck him that this step could be performed more efficiently, more reliably and more cheaply by a machine. But how? The challenge was to have a machine be able to calculate and store data. His first invention was a steam-powered machine that he called the Difference Engine. It could do simple math mechanically, but that was all it could do: one task at a time. A more useful calculating machine would be able to perform a variety of tasks simultaneously.

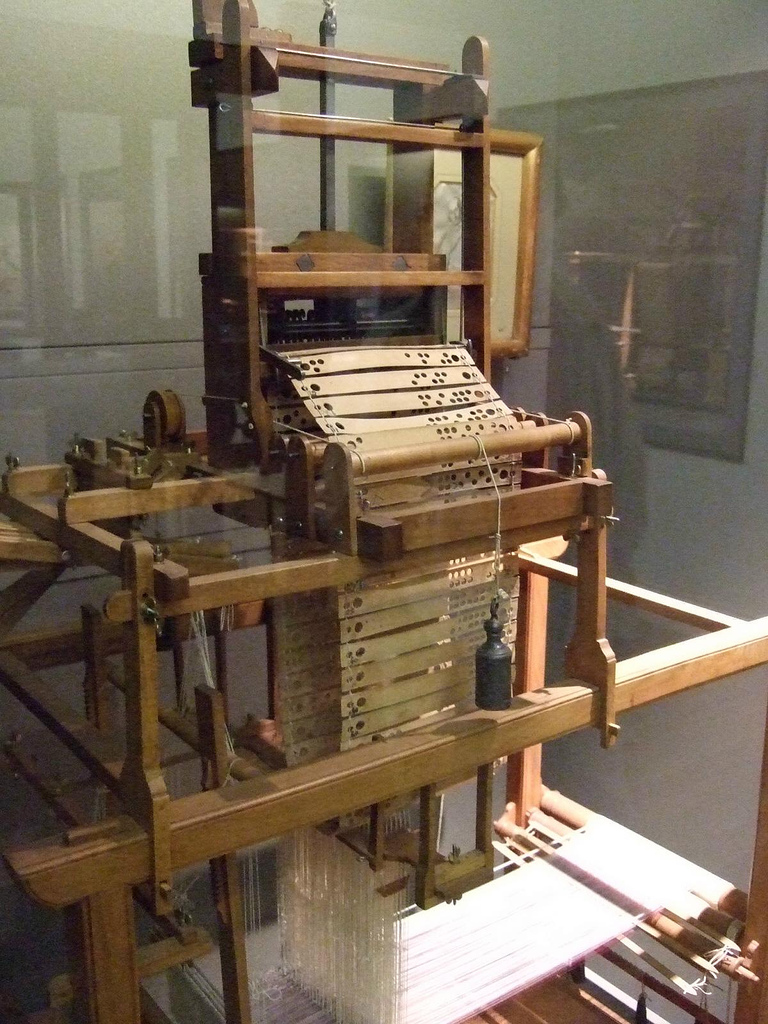

Babbage found inspiration in the very popular Jacquard loom, which used rectangular cards with rows of punched holes that stored specific patterns for weaving. These punch cards were used to "program" the loom to weave a variety of patterns. In 1832, way over budget, Charles Babbage presented his Difference Engine to the public. Most people were not impressed. It seemed too expensive and cumbersome for the meager results it produced.

Ada Lovelace (1815 - 1852)

“Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.” - From Ada Lovelace's translation and notes on Menabrea's “Sketch of the Analytical Engine”, 1843

Seventeen-year-old Ada Lovelace met Charles Babbage at a dinner party and became fascinated by his invention. Ada, daughter of the Romantic poet Lord Byron, was very skilled in math, but she also had a sensibility for art and poetry from her famous father. She seemed to understand intuitively that a machine that could perform logical calculations, that could take symbolic inputs and compute outputs, could also work with other kinds of symbols besides numbers. She wondered what a computer could do with words or musical notes.

Although Ada Lovelace became Babbage’s publicist and partner, and was a visionary about the expansive role of the computer in art and culture, she died with very little recognition for her contributions. Because she understood the Analytical Engine to be a general-purpose machine that could take complex instructions (algorithms) to perform a variety of tasks, many today consider her the first programmer. Her ideas also opened up the possibility for computers to collaborate with artists, musicians and poets. Despite being deterministic and precise, computers could be programmed for variable and random processes, as we will see in Chapter 9.

In 1834, after the failure of his Difference Engine and inspired by Ada’s ideas, Babbage came up with a better invention. The Analytical Engine was to be a general-purpose computer. Although it was never built, it is believed to have launched the computer era.

Components of a Computer

Computers are getting faster, smaller and more powerful by the year. But for the time being at least, the basic architecture of how a computer functions has remained the same for 100 years: a steady current of electricity powers a central processing unit that performs tasks and stores data in memory. Today's computers run with the following components:

Power Supply:

An internal hardware component that supplies power to other components in a computer.

CPU/Processor:

CPU is the abbreviation for Central Processing Unit, usually referred to simply as the processor. The processor is the brain of a computer, where most calculations take place.

Memory/RAM:

RAM is an acronym for Random Access Memory, a type of computer memory that can be accessed randomly; that is, any byte of memory can be accessed without touching the preceding bytes. RAM is the most common type of memory found in computers and other devices.

Hard Drive:

Sometimes abbreviated as HD, a hard drive is a "non-volatile" storage device: it stores and retrieves information and keeps that information even when the power is turned off.

Video Card:

A video card connects to the motherboard of a computer system and generates output images to display. Video cards are also referred to as "graphics cards." Video cards include a processing unit, memory, a cooling mechanism and connections to a display device.

Motherboard:

One of the essential parts of a computer system. It holds together many of the important components of a computer, including the central processing unit (CPU), memory and connectors for input and output devices. The base of a motherboard consists of a very firm sheet of non-conductive material, typically some sort of rigid plastic. Thin layers of copper or aluminum foil, referred to as traces, are printed onto this sheet. These traces are very narrow and form the circuits between the various components. In addition to circuits, a motherboard contains a number of sockets and slots to connect other components.

The Graphical User Interface

The graphical user interface or GUI is an important integration of hardware and software as it determines how humans interact with a computer. The computer interface has evolved from text-based instructions on a keyboard to sophisticated visual metaphors (pointer, folder, trashcan) to help users perform simple tasks using a mouse or trackpad. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces.

1.3

Software

A computer program is a collection of specific instructions for the computer to use to complete tasks, to interact with a user or to interact with other computers. Computer software holds these instructions or programs for the computer hardware.

Early Programming:

Crash Course Computer Science #10

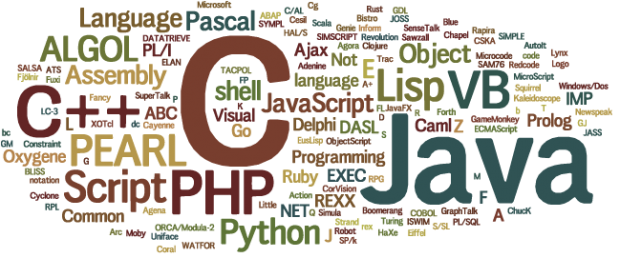

Programming languages are built from other programming languages and often work with the same essential programming concepts. New languages are developed to solve new problems for new hardware.

The first programmable computers required programmers to write explicit instructions that directly manipulated the hardware of the computer using a low-level language. A low-level language has little to no abstraction from machine code. Many programs today are written in mid-level or high-level languages, which involve greater abstraction and look more like natural language.

High-Level programming languages:

- Ruby: a general-purpose language for building web applications, data analysis and prototyping

- JavaScript: a client-side scripting language for creating web pages

- Perl: a general-purpose programming language developed for text manipulation and now used for system administration, web development, network programming, and GUI development

- PHP: a server-side scripting language used for the development of web, e-commerce and database applications

- Python: a general-purpose, high-level programming language for developing desktop GUI applications, websites and web applications

Mid-Level programming languages:

- Java: an object-oriented programming language, often considered easier to learn than C++, that is used to create complete applications that may run on a single computer or be distributed among servers and clients in a network

- C++: a general-purpose, object-oriented language used to develop games, desktop apps and operating systems

- C: a general-purpose, procedural programming language that is used for everything from microcontrollers to operating systems

Low-Level programming languages:

- Machine Code: usually written in binary, machine code is the lowest level of software. Other programming languages are translated into machine code so the computer can execute them.

- Assembly: assembly language is used primarily for direct hardware manipulation, access to specialized processor instructions, or to address critical performance issues.
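As a rough illustration of these levels of abstraction, the sketch below uses `dis`, a module in Python's standard library, to list the lower-level instructions behind a single line of high-level code. An important caveat: what `dis` shows is Python bytecode, an intermediate instruction set for Python's own interpreter, not the machine code of the processor. It is offered here only as an analogy. The function `add_tax` is a hypothetical example.

```python
import dis

def add_tax(price, rate):
    """A single line of high-level code."""
    return price + price * rate

# Collect the names of the lower-level instructions Python generates for add_tax.
# The exact list differs from one Python version to another.
instructions = [instruction.opname for instruction in dis.get_instructions(add_tax)]

print(len(instructions), "lower-level instructions for one line of Python:")
for name in instructions:
    print(" ", name)
```

Even this small function becomes several separate steps (load a value, multiply, add, return). A low-level programmer writes out steps of this kind by hand.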

Computational Thinking

Computer programming involves writing specific instructions in a particular syntax so that a computer can process data and solve problems. An e-commerce site, for example, needs to total a purchase, add tax and shipping, charge a card, and then send an order for shipment and a receipt. Writing a program that performs all of these tasks as efficiently as possible involves using variables, loops (repeated actions), conditionals (if-then statements) and functions (reusable sets of instructions). But before writing a single line of code, programmers use computational thinking to understand the parts of a problem and the sequence of steps needed to solve it. The four principles of computational thinking are:

- Decomposition: break the problem down into small parts

- Pattern Recognition: find the similarities and patterns in the parts

- Abstraction: with the found patterns, remove the details that are inessential to solving the problem

- Algorithm Writing: based on the abstractions, come up with a list of steps to solve the problem
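Part of the e-commerce example above might be sketched in Python as follows. It covers only totaling a purchase and adding tax and shipping, and it is one possible decomposition rather than how any real store works. The tax rate, shipping fee, free-shipping threshold, and function names are all hypothetical. Prices are kept in whole cents to avoid rounding surprises.

```python
# Variables: hypothetical store settings, all in cents or percent.
TAX_RATE_PERCENT = 8
SHIPPING_CENTS = 599
FREE_SHIPPING_OVER_CENTS = 5000

def subtotal(cart):
    """Function with a loop: add up price times quantity for every item."""
    total = 0
    for price_cents, quantity in cart:
        total += price_cents * quantity
    return total

def shipping(subtotal_cents):
    """Function with a conditional: large orders ship free."""
    if subtotal_cents >= FREE_SHIPPING_OVER_CENTS:
        return 0
    return SHIPPING_CENTS

def order_total(cart):
    """Combine the smaller parts into the final amount to charge."""
    items = subtotal(cart)
    tax = items * TAX_RATE_PERCENT // 100   # whole cents, rounded down
    return items + tax + shipping(items)

# Two items at $12.50 and one item at $3.99.
cart = [(1250, 2), (399, 1)]
print(order_total(cart))  # 3729 cents, or $37.29
```

Each function answers one small question. That is decomposition in practice, and it keeps each piece easy to check on its own.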

The First Programming Languages:

Crash Course Computer Science #11

1.4

Unit Exercise: Data Bending

Data Bending Exercise

1. Find an image using Google search and download it.

2. Change the extension of the file name from .png or .jpg to .txt.

3. Open the new file and observe the arrangement of alphanumeric symbols. This is code for the browser to display pixels in a frame.

4. Change some of this code. Search and replace one letter or number. But don’t change too much or you might break the image!

5. Change the file back to its original extension: .png or .jpg.

6. Open the file and observe the changes to the image when its underlying code is altered.
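The same experiment can also be tried in code. The Python sketch below is a variation on the exercise, not a replacement for it: instead of editing the file as text, it flips a few bytes directly. The function name `bend`, the 100-byte header size, and the stand-in data are assumptions. Real image formats have headers of different sizes, so some altered files may still fail to open.

```python
import random

def bend(data, header_size=100, changes=5, seed=0):
    """Return a copy of `data` with `changes` bytes after the header inverted."""
    if len(data) - header_size < changes:
        raise ValueError("not enough data after the header to bend")
    bent = bytearray(data)
    rng = random.Random(seed)                       # fixed seed makes the result repeatable
    positions = rng.sample(range(header_size, len(bent)), changes)
    for position in positions:
        bent[position] ^= 0xFF                      # flip all eight bits of this byte
    return bytes(bent)

# Stand-in data for demonstration: 1024 bytes, not a real image.
fake_image = bytes(range(256)) * 4
bent_image = bend(fake_image)

changed = sum(1 for a, b in zip(fake_image, bent_image) if a != b)
print(changed, "bytes changed out of", len(fake_image))

# With a real file, the bytes could be read with open(path, "rb") and the
# result saved with open(new_path, "wb"), where both paths are your own.
```

Leaving the first bytes untouched mirrors the advice in step 4: the start of an image file usually describes the format, and damaging it is the quickest way to break the image entirely.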

1.5

Glossary

1.6

Bibliography

“Ada Lovelace.” Wikipedia, 29 Aug. 2019. Wikipedia, https://en.wikipedia.org/w/index.php?title=Ada_Lovelace&oldid=913026966.

Boast, Robin. The Machine in the Ghost: Digitality and Its Consequences. 1st ed., Reaktion Books, 2017.

“Charles Babbage.” Wikipedia, 2 Sept. 2019. Wikipedia, https://en.wikipedia.org/w/index.php?title=Charles_Babbage&oldid=913720676.

Kernighan, Brian W. D Is for Digital: What a Well-Informed Person Should Know about Computers and Communications. CreateSpace Independent Publishing Platform, 2011.

Lister, Martin, et al. New Media: A Critical Introduction. 2nd ed., Routledge, 2009.

Manovich, Lev. The Language of New Media. Reprint ed., The MIT Press, 2002.

Steiglitz, Ken. The Discrete Charm of the Machine: Why the World Became Digital. Princeton University Press, 2019.

“Untangling the Tale of Ada Lovelace.” Stephen Wolfram Blog, https://blog.stephenwolfram.com/2015/12/untangling-the-tale-of-ada-lovelace/. Accessed 17 June 2019.