Experimenting with Kinect, WiiMote, Falcon, and iPads in the MOVE Lab

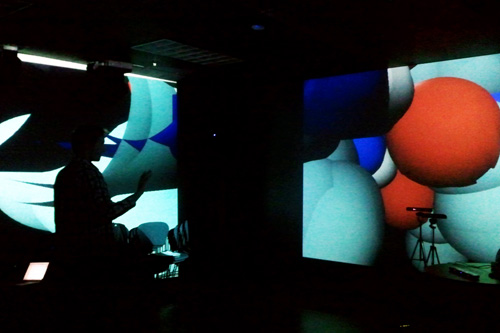

When I brought the MOVE Lab from Texas Woman’s University with me to Washington State University Vancouver in 2006 (with the support of my collaborator, multimedia performer and theorist Dr. Steve Gibson), I was using a proprietary motion-tracking, sensor-based system called GAMS (Gesture and Media System), created by our colleagues in Canada. GAMS allowed Steve and me to program the space as a 3D grid and then place media objects like music, video, animation, robotic lights, and fog in various locations in that grid. Using an infrared tracking device, we could move around the space and evoke media objects, producing “on the fly” live multimedia performances and installations.
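For readers who want a concrete picture of the grid idea, here is a minimal sketch in Python. It is not GAMS code: the class name `MediaGrid`, the room dimensions, the cell counts, and the media labels are all assumptions made up for illustration. It only shows the general technique of dividing a room into cells and looking up whatever media object sits in the cell where a tracked performer is standing.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int, int]


@dataclass
class MediaGrid:
    """Hypothetical model of a room divided into a 3D grid of cells."""
    room_size: Tuple[float, float, float]  # room extent in metres (x, y, z); assumed units
    cells: Tuple[int, int, int]            # number of cells along each axis
    media: Dict[Cell, str] = field(default_factory=dict)

    def cell_at(self, position: Tuple[float, float, float]) -> Cell:
        """Convert a tracked position in metres to integer grid indices."""
        index = []
        for coord, size, count in zip(position, self.room_size, self.cells):
            clamped = min(max(coord, 0.0), size)  # keep the point inside the room
            index.append(min(int(clamped / size * count), count - 1))
        return (index[0], index[1], index[2])

    def place(self, cell: Cell, media_name: str) -> None:
        """Assign a media object (a label here) to one grid cell."""
        self.media[cell] = media_name

    def evoke(self, position: Tuple[float, float, float]) -> Optional[str]:
        """Return the media object at the performer's position, if any."""
        return self.media.get(self.cell_at(position))


# Illustrative setup: a 6 m x 6 m x 3 m room split into 3 x 3 x 2 cells.
grid = MediaGrid(room_size=(6.0, 6.0, 3.0), cells=(3, 3, 2))
grid.place((0, 0, 0), "fog")
grid.place((2, 1, 1), "video: clip_a")
```

Calling `grid.evoke((5.0, 3.0, 2.0))` maps the position to cell `(2, 1, 1)` and returns the video label, while a position in an empty cell returns `None`. A real system would trigger playback rather than return a string.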

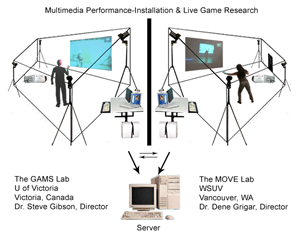

The system also allowed my lab to talk directly to Steve’s at the University of Victoria. For example, when I evoked a light in my lab, it would cause a light to move in his, and vice versa. Virtual DJ Net demonstrates this capability well. The project was part of Steve’s Canada Foundation for Innovation grant, which sought to experiment with net-based media and high-speed internet. Other work we completed before the grant ended in 2008 included When Ghosts Will Die and the MINDful Play Environment.

Fast forward to 2013. Steve took a position at the University of Newcastle in the UK, and GAMS needed funding for a major update. Because sensor-based technologies had become common for gaming, Steve and our colleagues in Canada had turned their attention to using commercial gaming equipment for motion-tracking, sensor-based projects and formed a company, Limbic Media, with Justin Love, Steve’s former student, as a partner and VP of Operations. Justin began consulting with me in the spring with the idea that I would use in the MOVE Lab what Limbic Media was doing with Kinect and WiiMote, essentially converting the lab from GAMS to commercial gaming technology.

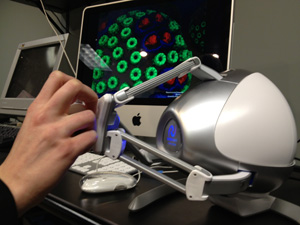

The first project with this new approach is “iSci: Interactive Technologies for Immersive Science,” a collaboration with my colleague, Dr. Alex Dimitrov (WSUV, Mathematics), that aims to produce an interactive immersive environment for teaching scientific concepts to students from middle school through college using the Kinect game system and Wii Remote. We have conceptualized the project to also include haptic feedback and augmented reality, so we have brought in a Falcon Game Controller and AR software. To pull all of these elements together, we have also envisioned an app-based book, created with HTML5 and CSS3 (and a little JavaScript), that would be read on an iPad (or other tablet) and include directions for interacting with the media, explanations of scientific information, and assessment activities. As such, our project envisions a mixed reality classroom experience and re-imagines the tools used for teaching, the environment in which learning takes place, and the methods of instruction used to encourage the learning process.

Having worked with the new system for the past several months, I have to say that the differences between it and GAMS are significant. Most importantly, GAMS allows for precise gestures across a wide space, while Kinect and WiiMote allow only for positioning within a small area. This means that I am limited in the movements I can make to evoke media. Second, GAMS only tracks infrared signals, so only those performers holding a tracker are “seen” by the system, while Kinect tracks everyone in the space, resulting in a slowdown in the computing and a loss of performance of the media. I will be able to gauge the positive aspects of Kinect (inexpensive, easy to travel with, simple to program) after more time. All I can say right now is that, as a multimedia performer, I miss the robustness of GAMS. I will put some brief videos in a later post to show what I mean.

We are in the throes of developing iSci and plan to have a working prototype by the end of August. To date, we have been able to get the Kinect and WiiMote to work with VMD. Projecting the 3D model of a salt molecule on three walls of the lab, we can manipulate the molecule with the technology. The Falcon allows us to feel the force of the molecules, and the iPad makes it possible to interact with salt crystals through AR. We have several CMDC alums and students working with us: Greg, of course, serves as our research and tech assistant; Aaron Wintersong is creating all of the animations for the AR and iPad; and Jason Cook is programming the AR for us. Alex’s daughter Marina, who just graduated from high school and is headed off to Stanford in the fall to study engineering, is working on the content.

Following iSci, I plan to convert my GAMS-based 3D multimedia performances, “Things of Day and Dream” and “Rhapsody Room,” to the new system with the idea that I can travel easily with the equipment for live performances. At that point I may have more to say about leaving GAMS behind.

The MOVE (Motion Tracking, Virtual Environment) Lab explores combining sensor-based physical computing with virtual and augmented reality technologies for education, business, communication, and art.

Director: Dr. Dene Grigar, The Creative Media & Digital Culture Program at Washington State University Vancouver.

Research Assistant: Greg Philbrook, B.A., CMDC Program

We were pleased to supervise the research of Catlin Gabel senior Marina Dimitrov, who undertook AR as the topic of her senior project. Marina will attend Stanford University in the fall to study Engineering. A summary of her project is online on her blog.

Scholarly Articles

Grigar, Dene. “Hyperlinking in 3D Multimedia Performances.” Beyond the Screen: Transformations of Literary Structures, Interfaces and Genres. Ed. Jörgen Schäfer and Peter Gendolla. Bielefeld, Germany: Transaction Publishers, March 2010.

Grigar, Dene. “Motion Tracking Technology, Telepresence, and Collaboration.” With Steve Gibson. Hyperrhiz 03, Summer 2007.

Grigar, Dene & Steve Gibson. "Found in Space: The Mindful Play Environment Is Born." LabLit.

Press & Blog Posts

Dimitrov, Marina. "Hanging Out in the Holodeck." 11 May 2013.

"Virtual Haunting: WSUV Research Takes Video Gaming to the Next Level." The Columbian. 5 Jun. 2011.

"All Hands on the Holodeck." The Columbian. 16 Apr. 2007.

"Dene Grigar Shows Off MOVE Lab at WSU Vancouver." The Columbian. 16 Apr. 2007.

Virtual DJ. "Networked Music Review." Turbulence. 2 Oct. 2007.